5. Redundancy with Tower

Note

Support for setting up a redundant environment is only available to those with Enterprise-level licenses.

Note

Prior to the Ansible Tower 2.4.5 release, the term High Availability was used to describe Tower’s redundancy offering. Because it is not a true HA solution, references to High Availability, highly-available, and HA have been replaced with the terms redundancy and redundant to avoid further confusion.

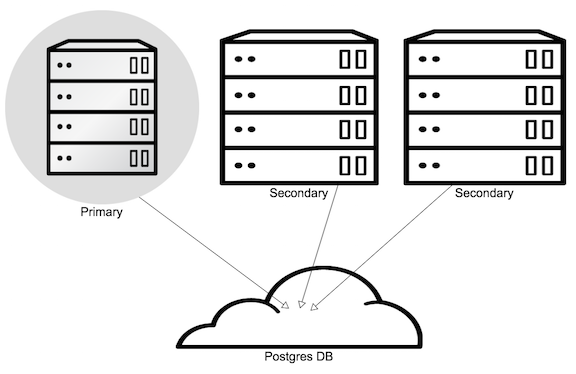

Tower installations can occur in a Redundant (active/passive) configuration. In this configuration, Tower runs with a single active node, called the Primary instance, and any number of inactive nodes, called Secondary instances. Tower Redundancy is a “passive standby” solution: a Secondary instance cannot start jobs until it has been promoted to Primary, and promotion is not automatic; a user must trigger it.

Secondary instances can become Primary at any time, with certain caveats. Running in a redundant setup requires any database that Tower uses to be external: PostgreSQL must be installed on a machine that is not one of the primary or secondary Tower nodes.

Tower’s redundancy offers a standby Tower infrastructure that can become active in case of infrastructure failure, avoiding single points of failure. Redundancy is not meant to run in an active/active or multi-master mode, and is not a mechanism for horizontally scaling the Tower service. Remember that failover to a secondary instance is not automatic and must be user triggered.

For instructions on how to install into a redundant active/passive configuration, refer to the Ansible Tower Installation and Reference Guide.

Note

As of the release of Ansible Tower 3.0, MongoDB is no longer packaged or used with Tower.

5.1. Setup Considerations

When creating a redundant deployment of Tower, consider the following factors:

Tower is designed as a unit.

Only those parts explicitly mentioned as being supported as external services are swappable for external versions of those services. For example, Tower does not support swapping out Django for Flask, Apache for lighttpd, or PostgreSQL for Oracle or MSSQL.

Tower servers need isolation.

If the primary and secondary Tower services share a physical host, a network, or potentially a data center, your infrastructure has a single point of failure. You should locate the Tower servers such that distribution occurs in a manner consistent with other services that you make available across your infrastructure. If your infrastructure is already using features such as Availability Zones in your cloud provider, having Tower distributed across Zones as well makes sense.

The database requires replication.

If Tower runs in a redundancy setup, but the database is not run in a redundant or replicated mode, you still have a single point of failure for your Tower infrastructure. The Tower installer will not set up database replication; instead, it prompts for database connection details to an existing database (which needs replication).

Choose a database replication strategy that is appropriate for your deployment.

- For the general case of PostgreSQL, refer to the PostgreSQL documentation or the PostgreSQL Wiki.

- For deployments using Amazon’s RDS, refer to the Amazon documentation.

Tower instances must maintain reasonable connections to the database.

Tower both queries and writes to the database frequently; good locality between the Tower server and the database replicas is critical to ensure performance.

Source Control is necessary.

To use playbooks stored locally on the Tower server (rather than set to check out from source control), you must ensure synchronization between the primary and secondary Tower instances. Using playbooks in source control alleviates this problem.

When using SCM Projects, a best practices approach is setting the Update on Launch flag on the job template. This ensures that checkouts occur each time the playbook launches and that newly promoted secondary instances have up-to-date copies of the project content. When a secondary instance is promoted, a project_update for all SCM managed projects in the database is triggered. This provides Tower with copies of all project playbooks.

A consistent Tower hostname for clients and users.

Between Tower users’ habits, Tower provisioning callbacks, and Tower API integrations, keep the Tower hostname that users and clients use constant. In a redundant deployment, use a reverse proxy or a DNS CNAME. The CNAME is strongly preferred due to the websocket connection Tower uses for real-time output.

When in a redundant setup, the remote PostgreSQL version requirement is PostgreSQL 9.4.x.

PostgreSQL 9.4.x is also required if Tower is running locally. With local setups, Tower handles the installation of these services. You should ensure that these are set up correctly if working in a remote setup.

For help allowing remote access to your PostgreSQL server, refer to: http://www.thegeekstuff.com/2014/02/enable-remote-postgresql-connection/

For example, a redundant configuration for an infrastructure consisting of three datacenters places a Tower server and a replicated database in each datacenter. Clients accessing Tower use a DNS CNAME which points to the address of the current primary Tower instance.

For information on determining size requirements for your setup, refer to Requirements in the Ansible Tower Installation and Reference Guide.

Note

Instances have been reported where reusing the external DB during subsequent redundancy installations causes installation failures.

For example, suppose you performed a redundant setup installation and later needed to perform a second one reusing the same external database, and that subsequent installation failed.

When setting up an external redundant database which has been used in a prior installation, the HA database must be manually cleared before any additional installations can succeed.

5.2. Differences between Primary and Secondary Instances

The Tower service runs on both primary and secondary instances. The primary instance accepts requests and runs jobs, while the secondary instances do not.

Connection attempts to the web interface or API of a secondary Tower server redirect to the primary Tower instance.

5.3. Post-Installation Changes to Primary Instances

When changing the configuration of a primary instance after installation, apply these changes to the secondary instances as well.

Examples of these changes would be:

- Updates to /etc/tower/conf.d/ha.py

- If you have configured LDAP or customized logging in /etc/tower/conf.d/ldap.py, you will need to reflect these changes in /etc/tower/conf.d/ldap.py on your secondary instances as well.

- Updating the Tower license

Any secondary instance of Tower requires a valid license to run properly when promoted to a primary instance. Copy the license from the primary node at any time or install it via the normal license installation mechanism after the instance promotes to primary status.

Note

Users of older versions of Tower should update /etc/tower/settings.py instead of files within /etc/tower/conf.d/.
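One way to check that a secondary still matches the primary is to compare content hashes of the files under /etc/tower/conf.d/. The following is a minimal Python sketch, not a Tower feature. The function names `config_fingerprints` and `drifted_files` are hypothetical, and the sketch assumes you run the first function on each node and bring the two results together yourself. Some files may legitimately differ between nodes, so treat the output as a prompt for review rather than as an error report.

```python
import hashlib
from pathlib import Path


def config_fingerprints(conf_dir):
    """Map each *.py file name in conf_dir to the SHA-256 hex digest of its contents."""
    return {
        path.name: hashlib.sha256(path.read_bytes()).hexdigest()
        for path in sorted(Path(conf_dir).glob("*.py"))
    }


def drifted_files(primary, secondary):
    """Return sorted file names that are missing on one side or differ in content."""
    names = set(primary) | set(secondary)
    return sorted(name for name in names if primary.get(name) != secondary.get(name))
```

On a Tower node, `config_fingerprints("/etc/tower/conf.d")` would produce the mapping for that node; passing the primary's and a secondary's mappings to `drifted_files` lists the files worth reviewing.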

5.4. Examining the redundancy configuration of Tower

To see the redundancy configuration of Tower, you can query the ping endpoint of the Tower REST API. To do this via Tower’s built-in API browser, go to https://<Tower server name>/api/v1/ping/. You can go to this specific URL on either the primary or secondary nodes.

An example return from this API call would be (in JSON format):

    HTTP 200 OK
    Content-Type: application/json
    Vary: Accept
    Allow: GET, HEAD, OPTIONS
    X-API-Time: 0.008s

    {
        "instances": {
            "primary": "192.168.122.158",
            "secondaries": [
                "192.168.122.109",
                "192.168.122.26"
            ]
        },
        "ha": true,
        "role": "primary",
        "version": "2.1.4"
    }

It contains the following fields.

- Instances

  - Primary: The primary Tower instance (hostname or IP address)

  - Secondaries: The secondary Tower instances (hostname or IP address)

- HA: Whether Tower is running in active/passive redundancy mode

- Role: Whether this specific instance is a primary or secondary

- Version: The Tower version in use
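To make the field layout concrete, here is a small Python sketch that decodes a ping response body and pulls out the fields listed above. The payload is copied from the example response; the helper name `summarize_ping` and the shape of the returned dictionary are illustrative choices, not part of Tower.

```python
import json

# Body of the example /api/v1/ping/ response shown earlier in this section.
SAMPLE_PING = """
{
  "instances": {
    "primary": "192.168.122.158",
    "secondaries": ["192.168.122.109", "192.168.122.26"]
  },
  "ha": true,
  "role": "primary",
  "version": "2.1.4"
}
"""


def summarize_ping(body):
    """Return a flat dict describing the redundancy state in a ping response body."""
    data = json.loads(body)
    instances = data.get("instances", {})
    return {
        "redundant": bool(data.get("ha", False)),
        "role": data.get("role"),
        "primary": instances.get("primary"),
        "secondaries": list(instances.get("secondaries", [])),
        "version": data.get("version"),
    }


summary = summarize_ping(SAMPLE_PING)
print(summary["role"], summary["primary"], len(summary["secondaries"]))
# prints: primary 192.168.122.158 2
```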

5.5. Promoting a Secondary Instance/Failover

To promote a secondary instance to be the new primary instance (also known as initiating failover), use the tower-manage command.

To make a running secondary node the primary node, log on to the desired new primary node and run the update_instance command of tower-manage as follows:

    root@localhost:~$ tower-manage update_instance --primary
    Successfully updated instance role (uuid="ec2dc2ac-7c4b-9b7e-b01f-0b7c30d0b0ab",hostname="localhost",role="primary")

The current primary instance changes to be a secondary.

Note

Secondary nodes need a valid Tower license at /etc/tower/license to function as a proper primary instance. Copy the license from the primary node at any time or install it via the normal license installation mechanism after the instance promotes to primary status.

On failover, queued or running jobs in the database are marked as failed.

Tower does not perform any health checks between primary and secondary nodes and does not fail over automatically if the primary node is lost. You should use an external monitoring or heartbeat tool combined with tower-manage for these system health checks. The /ping API endpoint can serve as the health probe.
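As a rough illustration of such an external check, the Python sketch below polls the ping endpoint a few times and reports whether the primary appears to be down. It is a sketch under stated assumptions, not a recommended monitoring design: the function names, the retry count, and the delay are all hypothetical, and HTTPS certificate verification follows Python's defaults, so a node with an untrusted certificate would be counted as a failed check. The sketch only reports; promoting a secondary with `tower-manage update_instance --primary` remains a deliberate operator action.

```python
import json
import time
import urllib.request


def fetch_ping(base_url, timeout=5.0):
    """Fetch and decode /api/v1/ping/ from a Tower node; raises on any failure."""
    url = base_url.rstrip("/") + "/api/v1/ping/"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def primary_looks_down(fetch, attempts=3, delay=10.0, sleep=time.sleep):
    """Return True only if every attempt fails or reports a non-primary role."""
    for attempt in range(attempts):
        try:
            if fetch().get("role") == "primary":
                return False
        except Exception:
            pass  # network errors, timeouts, and bad JSON all count as a failed check
        if attempt < attempts - 1:
            sleep(delay)
    return True


# Offline demonstration with stand-in fetchers (no network access needed).
def healthy():
    return {"role": "primary"}


def unreachable():
    raise OSError("connection refused")


print(primary_looks_down(healthy, sleep=lambda seconds: None))      # prints: False
print(primary_looks_down(unreachable, sleep=lambda seconds: None))  # prints: True
```

Against a real deployment, the `fetch` argument could be `lambda: fetch_ping("https://<Tower server name>")`. Requiring several consecutive failures before reporting reduces the chance of reacting to a brief network interruption.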

Caution

If you are attempting to replicate across regions or other geographically separated locations, creating an HA environment will take a great deal of customization. Tower’s HA is not meant to do full replication, as it is not written to be fully available globally. If attempting this type of replication, you should keep in mind the following:

- The stdout for your job history is not stored in the database but on the file system.

- Replicating the database does not provide you with all of your history, only highlights of important history moments.

- Projects folders should be reviewed as role dependencies may have placed the roles there or in other locations.

- Creating snapshots of VMs and backups may offer you more with less customization, allowing you to relaunch your Tower instance more easily (refer to Backing Up and Restoring Tower for more information).

5.6. Decommissioning Secondary Instances

You cannot decommission a current primary Tower instance without first selecting a new primary.

If you need to decommission a secondary instance of Tower, log on to the secondary node and run the remove_instance command of tower-manage as follows:

    root@localhost:~$ tower-manage remove_instance --hostname tower2.example.com
    Instance removed (changed: True).

Replace tower2.example.com with the registered name (IP address or hostname) of the Tower instance you are removing from the list of secondaries.

You can then shut down the Tower service on the decommissioned secondary node.
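If you script decommissioning, a guard that consults the ping endpoint first can prevent an attempt to remove the current primary. The Python sketch below is illustrative only: the helper name `removal_command` is hypothetical, the payload mirrors the example response in section 5.4, and the function merely builds the argument list for the `tower-manage remove_instance` command shown above rather than running it.

```python
# Payload shaped like the /api/v1/ping/ example in section 5.4.
PING_PAYLOAD = {
    "instances": {
        "primary": "192.168.122.158",
        "secondaries": ["192.168.122.109", "192.168.122.26"],
    },
    "ha": True,
    "role": "primary",
}


def removal_command(hostname, ping_payload):
    """Build the tower-manage argument list for removing hostname.

    Raises ValueError if hostname is the current primary or is not a
    registered secondary according to the ping payload.
    """
    instances = ping_payload.get("instances", {})
    if hostname == instances.get("primary"):
        raise ValueError(f"{hostname} is the current primary; promote another instance first")
    if hostname not in instances.get("secondaries", []):
        raise ValueError(f"{hostname} is not a registered secondary")
    return ["tower-manage", "remove_instance", "--hostname", hostname]


print(" ".join(removal_command("192.168.122.109", PING_PAYLOAD)))
# prints: tower-manage remove_instance --hostname 192.168.122.109
```

The returned list is in the form `subprocess.run` accepts, should you choose to execute it on the secondary node.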