3. 升级至 Execution Environments¶

如果从旧版本的 automation controller 升级到 4.0 或更高版本,控制器可以检测到与机构、清单和作业模板关联的虚拟环境的以前版本,并通知您需要将它们迁移到新的 execution environment 模型。automation controller 的一个全新安装会在安装过程中创建两个 virtualenv:一个用于运行控制器本身,另一个用于运行 Ansible。与旧的虚拟环境一样,execution environments 允许控制器在一个稳定的环境中运行,同时允许您根据需要在 execution environment 中添加或更新模块以运行 playbook。如需更多信息,请参阅 Automation Controller User Guide 中的 Execution Environments。

3.1. 将旧的 venv 迁移到 execution environments¶

通过将之前自定义虚拟环境中的设置迁移到新的 execution environment,您在 execution environment 中会有一个和以前一样的设置。使用本节中的 awx-manage 命令:

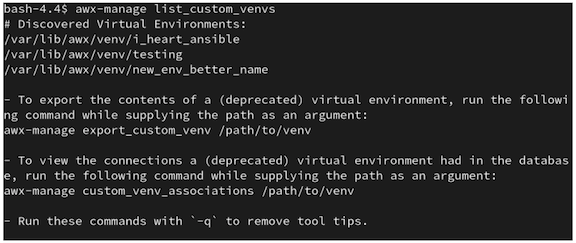

所有当前自定义虚拟环境及其路径列表(

list_custom_venvs)查看依赖特定自定义虚拟环境的资源(

custom_venv_associations)将特定的自定义虚拟环境导出为可用于迁移到 execution environment 的格式(

export_custom_venv)

在迁移前,建议您使用

awx-manage list命令查看所有当前运行的自定义虚拟环境:

$ awx-manage list_custom_venvs

以下是运行这个命令时的输出示例:

以上输出显示了三个自定义虚拟环境及其路径。如果您有一个没有位于默认 /var/lib/awx/venv/ 目录路径中的自定义虚拟环境,则它不会包含在这个输出中。

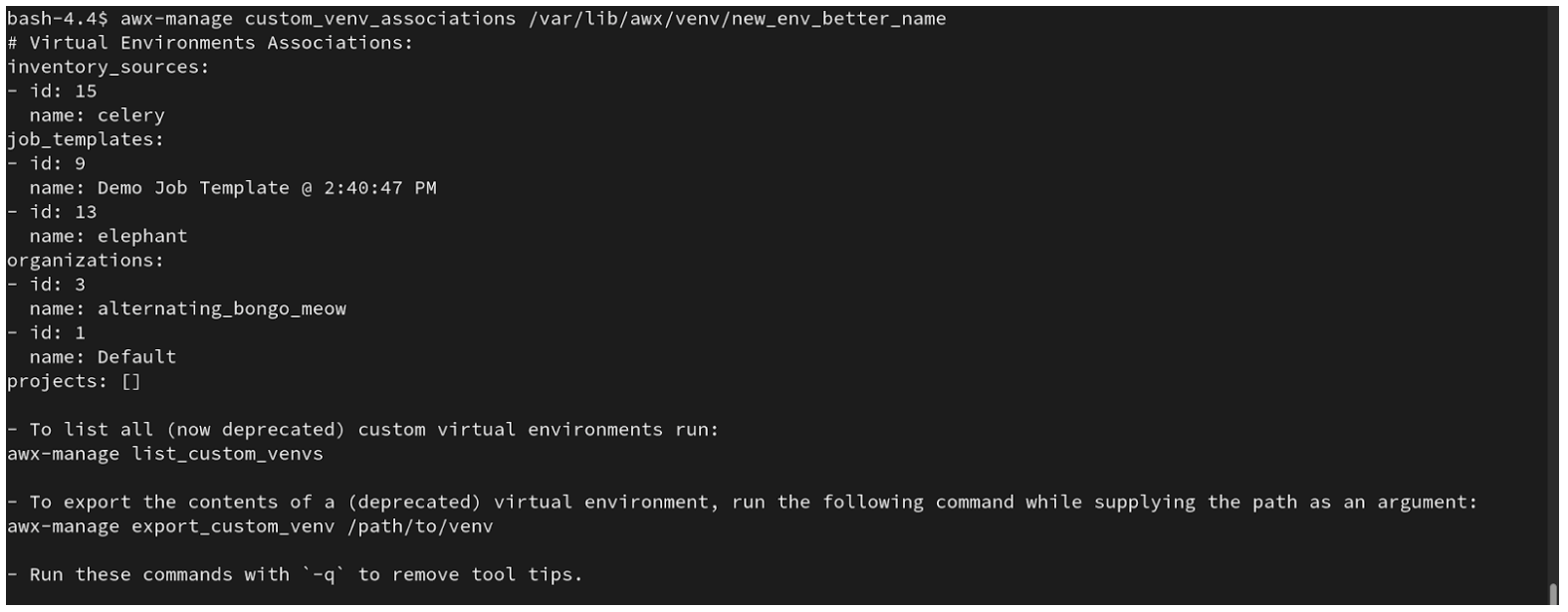

使用

_associations命令查看自定义虚拟环境关联的机构、作业和清单源,以确定哪些资源依赖它们:

$ awx-manage custom_venv_associations /this/is/the/path/

以下是运行这个命令时的输出示例:

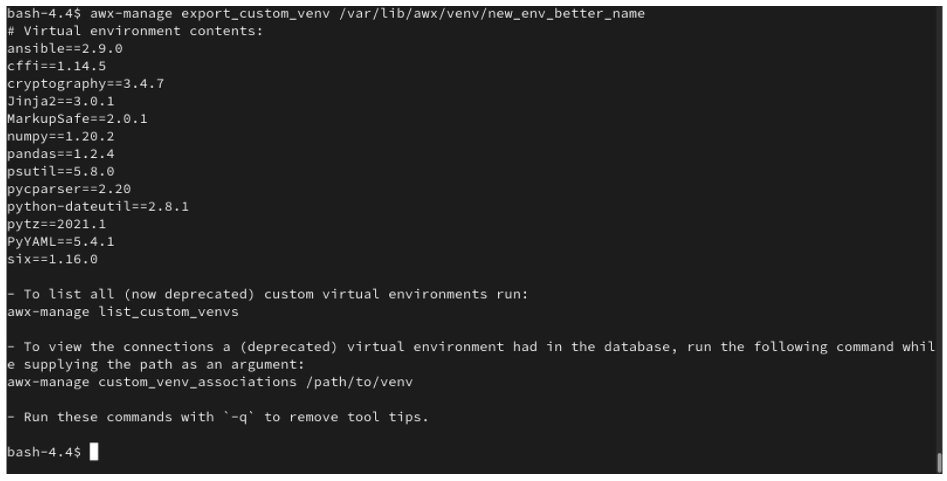

为您要迁移的虚拟环境选择一个路径,并在

awx-manage export命令中指定:

$ awx-manage export_custom_venv /this/is/the/path/

生成的输出实际上就是执行 pip freeze 命令的结果。示例显示了所选自定义虚拟环境的内容:

注解

这些命令都可使用 -q 选项运行,该选项会删除每个输出中提供的指导性内容。

现在,您已拥有这个 pip freeze 数据的输出,您可以将这些数据粘贴到一个定义文件中,该文件可以使用 ansible-builder 来启动您的新 execution environment。任何人(包括普通用户和管理员)都可以使用 ansible-builder 创建一个 execution environment。详情请查看 Automation Controller User Guide 中的 构建一个 Execution Environment。

3.2. Migrate isolated instances to execution nodes¶

The move from isolated instance groups to execution nodes enables inbound or outbound connections. Contrast this with versions 3.8 and older where only outbound connections were allowed from controller nodes to isolated nodes.

Migrating legacy isolated instance groups to execution nodes in order to function properly in the automation controller mesh architecture in 4.1, is a preflight function of the installer that essentially creates an inventory file based on your old file. Even though both .ini and .yml files are still accepted formats, the generated file output is only an .ini file at this time.

The preflight check leverages Ansible; and Ansible flattens the concept of children, this means that not every single inventory file can be replicated exactly, but it is very close. It will be functionally the same to Ansible, but may look different to you. The automated preflight processing does its best to create child relationships based on heuristics, but be aware that the tool lacks the nuance and judgment that human users have. Therefore, once the file is created, do NOT use it as-is. Check the file over and use it as a template to ensure that they work well for both you and the Ansible engine.

Here is an example of a before and after preflight check, demonstrating how Ansible flattens an inventory file and how the installer reconstructs a new inventory file. To Ansible, both of these files are essentially the same.

Old style (from Ansible docs) |

New style (generated by installer) |

|---|---|

[tower]

localhost ansible_connection=local

[database]

[all:vars]

admin_password='******'

pg_host=''

pg_port=''

pg_database='awx'

pg_username='awx'

pg_password='******'

rabbitmq_port=5672

rabbitmq_vhost=tower

rabbitmq_username=tower

rabbitmq_password='******'

rabbitmq_cookie=cookiemonster

# Needs to be true for fqdns and ip addresses

rabbitmq_use_long_name=false

[isolated_group_restrictedzone]

isolated-node.c.towertest-188910.internal

[isolated_group_restrictedzone:vars]

controller=tower

|

[all:vars]

admin_password='******'

pg_host=''

pg_port=''

pg_database='awx'

pg_username='awx'

pg_password='******'

rabbitmq_port=5672

rabbitmq_vhost='tower'

rabbitmq_username='tower'

rabbitmq_password='******'

rabbitmq_cookie='cookiemonster'

rabbitmq_use_long_name='false'

# In AAP 2.X [tower] has been renamed to [automationcontroller]

# Nodes in [automationcontroller] will be hybrid by default, capable of executing user jobs.

# To specify that any of these nodes should be control-only instead, give them a host var of `node_type=control`

[automationcontroller]

localhost

[automationcontroller:vars]

# in AAP 2.X the controller variable has been replaced with `peers`

# which allows finer grained control over node communication.

# `peers` can be set on individual hosts, to a combination of multiple groups and hosts.

peers='instance_group_restrictedzone'

ansible_connection='local'

# in AAP 2.X isolated groups are no longer a special type, and should be renamed to be instance groups

[instance_group_restrictedzone]

isolated-node.c.towertest-188910.internal

[instance_group_restrictedzone:vars]

# in AAP 2.X Isolated Nodes are converted into Execution Nodes using node_state=iso_migrate

node_state='iso_migrate'

# In AAP 2.X Execution Nodes have replaced isolated nodes. All of these nodes will be by default

# `node_type=execution`. You can specify new nodes that cannot execute jobs and are intermediaries

# between your control and execution nodes by adding them to [execution_nodes] and setting a host var

# `node_type=hop` on them.

[execution_nodes]

[execution_nodes:children]

instance_group_restrictedzone

|

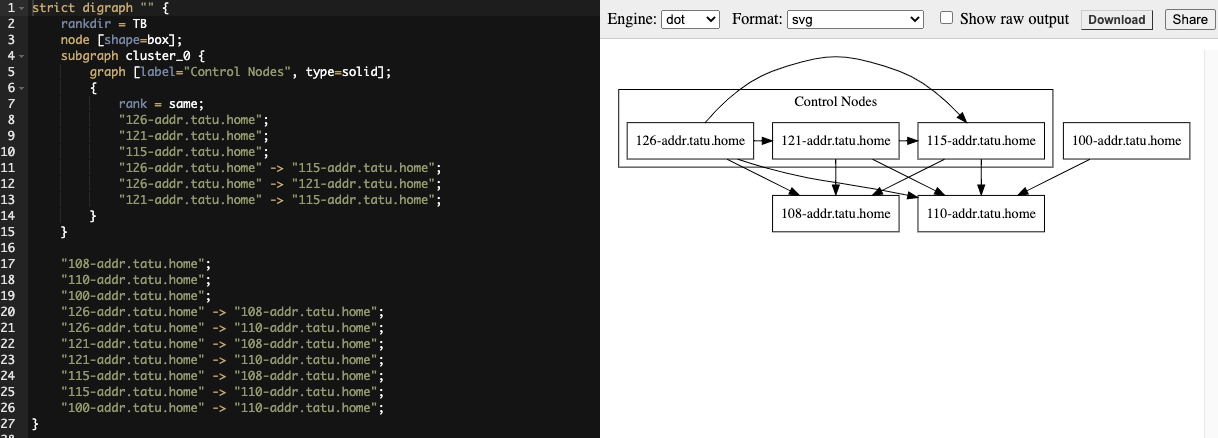

3.3. View mesh topology¶

If you configured a mesh topology, the installer can graphically validate your mesh configuration through a generated graph rendering tool. The graph is generated by reading the contents of the inventory file. See the Red Hat Ansible Automation Platform automation mesh guide for further detail.

Any given inventory file must include some sort of execution capacity that is governed by at least one control node. That is, it is unacceptable to produce an inventory file that only contains control-only nodes, execution-only nodes or hop-only nodes. There is a tightly coupled relationship between control and execution nodes that must be respected at all times. The installer will fail if the inventory files aren't properly defined. The only exception to this rule would be a single hybrid node, as it will satisfy the control and execution constraints.

In order to run jobs on an execution node, either the installer needs to pre-register the node, or user needs to make a PATCH request to /api/v2/instances/N/ to change the enabled field to true.