3. Installing Ansible Tower

Tower can be installed in various ways by choosing the best mode for your environment and making any necessary modifications to the inventory file. For OpenShift-based deployments, refer to OpenShift Deployment and Configuration.

3.1. Tower Installation Scenarios

Tower can be installed using one of the following scenarios:

Single Machine:

- As an integrated installation:

This is a single-machine install of Tower: the web frontend, REST API backend, and database all run on one machine. This is the standard installation of Tower. It also installs PostgreSQL from your OS vendor repository and configures the Tower service to use it as its database.

- With an external database (2 options available):

Tower with remote DB configuration: This installs the Tower server on a single machine and configures it to talk to a remote instance of PostgreSQL 9.6 as its database. This remote PostgreSQL can be a server you manage, or can be provided by a cloud service such as Amazon RDS.

Tower with a playbook install of a remote Postgres system: This installs the Tower server on a single machine and installs a remote Postgres database via the playbook installer (managed by Tower).

Note

1. Tower will not configure replication or failover for the database that it uses, although Tower should work with any replication that you have.

2. The database server should be on the same network or in the same datacenter as the Tower server for performance reasons.

High Availability Multi-Machine Cluster:

Tower can be installed in a high availability cluster mode. In this mode, multiple Tower nodes are installed and active. Any node can receive HTTP requests and all nodes can execute jobs.

- A Clustered Tower setup must be installed with an external database (2 options available):

Tower with remote DB configuration: This installs Tower on the cluster nodes and configures them to talk to a remote instance of PostgreSQL as their database. This remote PostgreSQL can be a server you manage, or can be provided by a cloud service such as Amazon RDS.

Tower with a playbook install of a remote Postgres system: This installs Tower on the cluster nodes and installs a remote Postgres database via the playbook installer (managed by Tower).

For more information on configuring a clustered setup, refer to Clustering.

Note

Running in a cluster setup requires any database that Tower uses to be external: Postgres must be installed on a machine that is not one of the primary or secondary Tower nodes. In a redundant setup, the remote Postgres version requirement is PostgreSQL 9.6.

3.2. Setting up the Inventory File

As you edit your inventory file, there are a few things you must keep in mind:

The contents of the inventory file should be defined in ./inventory, next to the ./setup.sh installer playbook.

For installations and upgrades: If you need to make use of external databases, you must ensure the database sections of your inventory file are properly set up. Edit this file and add your external database information before running the setup script.

For upgrading an existing cluster: When upgrading a cluster, you may also want to reconfigure it to omit existing instances or instance groups. Omitting an instance or instance group from the inventory file is not enough to remove it from the cluster. You must also deprovision those instances or instance groups before starting the upgrade. Otherwise, the omitted instances or instance groups will continue to communicate with the cluster, which can cause issues with Tower services during the upgrade.

For clustered installations: If you are creating a clustered setup, you must replace localhost with the hostname or IP address of all instances. All nodes/instances must be able to reach any others using this hostname or address. In other words, you cannot use localhost ansible_connection=local on one of the nodes, and all of the nodes must use the same format for their host names. Therefore, this will not work:

[tower]

localhost ansible_connection=local

hostA

hostB.example.com

172.27.0.4

Instead, use these formats:

[tower]

hostA

hostB

hostC

OR

[tower]

hostA.example.com

hostB.example.com

hostC.example.com

OR

[tower]

172.27.0.2

172.27.0.3

172.27.0.4
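The localhost rule above can be checked before running the installer. The following is a minimal sketch, not part of the Tower installer: the function names tower_hosts and cluster_hosts_ok are hypothetical, and the script assumes an INI-style inventory with one host per line under the [tower] group. It only detects a localhost entry; it does not verify that all hosts use the same name format.

```shell
#!/bin/sh
# Hypothetical helper (not shipped with Tower): print the hosts listed
# in the [tower] group of an INI-style inventory file, one per line.
tower_hosts() {
    awk '
        /^\[/ { in_tower = ($1 == "[tower]"); next }   # any section header
        in_tower && NF && $1 !~ /^[#;]/ { print $1 }   # first field is the host
    ' "$1"
}

# Hypothetical check: succeed (exit status 0) only when no host in the
# [tower] group is "localhost", as a clustered setup requires.
cluster_hosts_ok() {
    ! tower_hosts "$1" | grep -qx 'localhost'
}
```

For example, `cluster_hosts_ok ./inventory || echo "replace localhost in [tower]"` prints the warning only when the group still contains a localhost entry.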

For all standard installations: When performing an installation, you must supply any necessary passwords in the inventory file.

Note

Changes made to the installation process now require that you fill out all of the password fields in the inventory file. The values for these fields are found as follows:

admin_password='' <-- Tower local admin password

pg_password='' <-- Found in /etc/tower/conf.d/postgres.py

rabbitmq_password='' <-- Create a new password here (alphanumeric with no special characters)
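Because every password field must be filled in, it can help to scan the inventory for empty ones before running setup. This is a minimal sketch under stated assumptions: the function name empty_passwords is hypothetical, it treats any variable whose name ends in _password as a password field, and it does not handle inline comments after a value.

```shell
#!/bin/sh
# Hypothetical helper (not shipped with Tower): print the name of every
# *_password variable in an inventory file whose value is empty.
empty_passwords() {
    awk -v q="'" '
        /^[ \t]*[#;]/ { next }                   # skip comment lines
        {
            eq = index($0, "=")
            if (eq == 0) next                    # not a key=value line
            name  = substr($0, 1, eq - 1)
            value = substr($0, eq + 1)
            gsub(/[ \t]/, "", name)              # trim the variable name
            gsub("[ \t\"" q "]", "", value)      # drop spaces and quotes
            if (name ~ /_password$/ && value == "") print name
        }
    ' "$1"
}
```

Running `empty_passwords ./inventory` prints nothing when all password fields have values.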

Example Inventory file

For provisioning new nodes: When provisioning new nodes, add them to the inventory file alongside all current nodes, and make sure all passwords are included in the inventory file.

For upgrading a single node: When upgrading, be sure to compare your inventory file to the current release version. It is recommended that you keep the passwords in the inventory file even when performing an upgrade.

Example Single Node Inventory File

[tower]

localhost ansible_connection=local

[database]

[all:vars]

admin_password='password'

pg_host=''

pg_port=''

pg_database='awx'

pg_username='awx'

pg_password='password'

rabbitmq_port=5672

rabbitmq_username=tower

rabbitmq_password='password'

rabbitmq_cookie=rabbitmqcookie

# Needs to be true for fqdns and ip addresses

rabbitmq_use_long_name=false

# Needs to remain false if you are using localhost

Example Multi Node Cluster Inventory File

[tower]

clusternode1.example.com

clusternode2.example.com

clusternode3.example.com

[database]

dbnode.example.com

[all:vars]

ansible_become=true

admin_password='password'

pg_host='dbnode.example.com'

pg_port='5432'

pg_database='tower'

pg_username='tower'

pg_password='password'

rabbitmq_port=5672

rabbitmq_username=tower

rabbitmq_password=tower

rabbitmq_cookie=rabbitmqcookie

# Needs to be true for fqdns and ip addresses

rabbitmq_use_long_name=true

Example Inventory file for an external existing database

[tower]

node.example.com ansible_connection=local

[database]

[all:vars]

admin_password='password'

pg_password='password'

rabbitmq_password='password'

pg_host='database.example.com'

pg_port='5432'

pg_database='awx'

pg_username='awx'

Example Inventory file for external database which needs installation

[tower]

node.example.com ansible_connection=local

[database]

database.example.com

[all:vars]

admin_password='password'

pg_password='password'

rabbitmq_password='password'

pg_host='database.example.com'

pg_port='5432'

pg_database='awx'

pg_username='awx'

Once any necessary changes have been made, you are ready to run ./setup.sh.

Note

Root access to the remote machines is required. With Ansible, this can be achieved in different ways:

- ansible_user=root ansible_ssh_pass="your_password_here" (inventory host or group variables)

- ansible_user=root ansible_ssh_private_key_file="path_to_your_keyfile.pem" (inventory host or group variables)

- ANSIBLE_BECOME_METHOD='sudo' ANSIBLE_BECOME=True ./setup.sh

- ANSIBLE_SUDO=True ./setup.sh (only applies to Ansible 2.7)

The DEFAULT_SUDO Ansible configuration parameter was removed in Ansible 2.8, which causes the ANSIBLE_SUDO=True ./setup.sh method of privilege escalation to no longer work. For more information on become plugins, refer to Understanding Privilege Escalation and the list of become plugins.

3.3. The Setup Playbook

Note

Ansible Tower 3.0 simplifies installation and removes the need to run ./configure/ as part of the installation setup. Users of older versions should follow the instructions available in the v.2.4.5 (or earlier) releases of the Tower Documentation available at:

http://docs.ansible.com/

The Tower setup playbook script uses the inventory file and is invoked as ./setup.sh from the path where you unpacked the Tower installer tarball.

root@localhost:~$ ./setup.sh

The setup script takes the following arguments:

- -h: Show this help message and exit

- -i INVENTORY_FILE: Path to Ansible inventory file (default: inventory)

- -e EXTRA_VARS: Set additional Ansible variables as key=value or YAML/JSON (e.g. -e bundle_install=false forces an online installation)

- -b: Perform a database backup in lieu of installing

- -r: Perform a database restore in lieu of installing (a default restore path is used unless EXTRA_VARS are provided with a non-default path, as shown in the code example below)

./setup.sh -e 'restore_backup_file=/path/to/nondefault/location' -r
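The arguments listed above can be combined in one call. The sketch below assumes a hypothetical wrapper function, run_setup, and a hypothetical DRY_RUN variable (neither is part of the Tower installer) so that a command line can be previewed before it is run from the directory that contains setup.sh.

```shell
#!/bin/sh
# Hypothetical wrapper around ./setup.sh (not part of the installer).
# With DRY_RUN=1 it only prints the command it would run.
run_setup() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf '%s\n' "./setup.sh $*"
    else
        ./setup.sh "$@"
    fi
}
```

For instance, `DRY_RUN=1 run_setup -i /path/to/inventory -b` previews a database backup that reads a non-default inventory file, and `run_setup -i /path/to/inventory -b` then performs it. The inventory path here is a placeholder.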

Note

Please note that an issue was discovered in Tower 3.0.0 and 3.0.1 that prevented proper system backups and restorations.

If you need to back up or restore your Tower v3.0.0 or v3.0.1 installation, use the v3.0.2 installer to do so.

After you call ./setup.sh with the appropriate parameters, Tower is installed on the machines as configured in the inventory file. Setup installs Tower from RPM or Deb packages using repositories hosted on ansible.com.

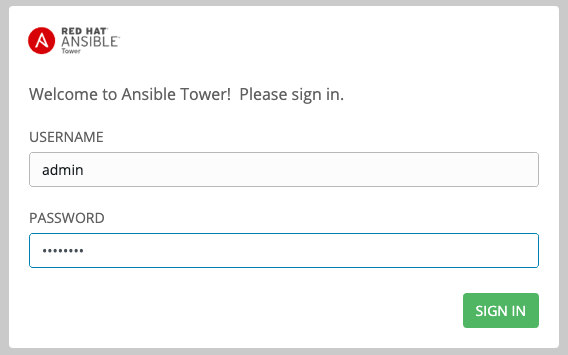

Once setup is complete, use your web browser to access the Tower server and view the Tower login screen. Your Tower server is accessible on port 80 (http://<TOWER_SERVER_NAME>/) but redirects to port 443, so port 443 must also be available.

If the installation of Tower fails and you are a customer who has purchased a valid license for Ansible Tower, please contact Ansible via the Red Hat Customer portal at https://access.redhat.com/.

3.4. Changing the Password

Once Tower is installed, if you log in to the Tower instance via SSH, the default admin password is provided in the prompt. You can then change it with the following command (as root or as the awx user):

awx-manage changepassword admin

After that, the password you have entered will work as the admin password in the web UI.