3. Upgrading to Execution Environments

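When you upgrade from an older version of automation controller to 4.0 or later, the controller can detect virtual environments from previous versions that are associated with organizations, inventories, and job templates, and it informs you that they need to be migrated to the new execution environment model. A new installation of automation controller creates two virtualenvs during installation: one is used to run the controller itself, and the other is used to run Ansible. Like legacy virtual environments, execution environments allow the controller to run in a stable environment while letting you add or update modules in your execution environment as needed to run your playbooks. For more information, see Execution Environments in the Automation Controller User Guide.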

Important

When upgrading, it is highly recommended to always rebuild on top of the base execution environment that corresponds to the platform version you are using. See Building an Execution Environment for more information.

3.1. Migrate legacy virtualenvs to execution environments

Migrating your setup to a new execution environment lets you reproduce the exact setup of your previous custom virtual environments in your execution environments. Use the awx-manage commands in this section to:

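- list all the current custom virtual environments and their paths (list_custom_venvs)
- view the resources that rely on a particular custom virtual environment (custom_venv_associations)
- export a particular custom virtual environment to a format that can be used to migrate to an execution environment (export_custom_venv)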

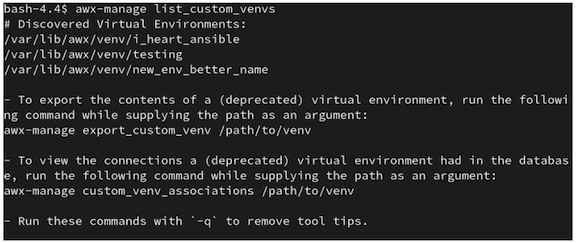

Before you migrate, it is recommended that you view all the custom virtual environments you currently have running:

$ awx-manage list_custom_venvs

The following is an example of this command's output.

This output shows three custom virtual environments and their paths. A custom virtual environment that is not located within the default /var/lib/awx/venv/ directory path is not included here.

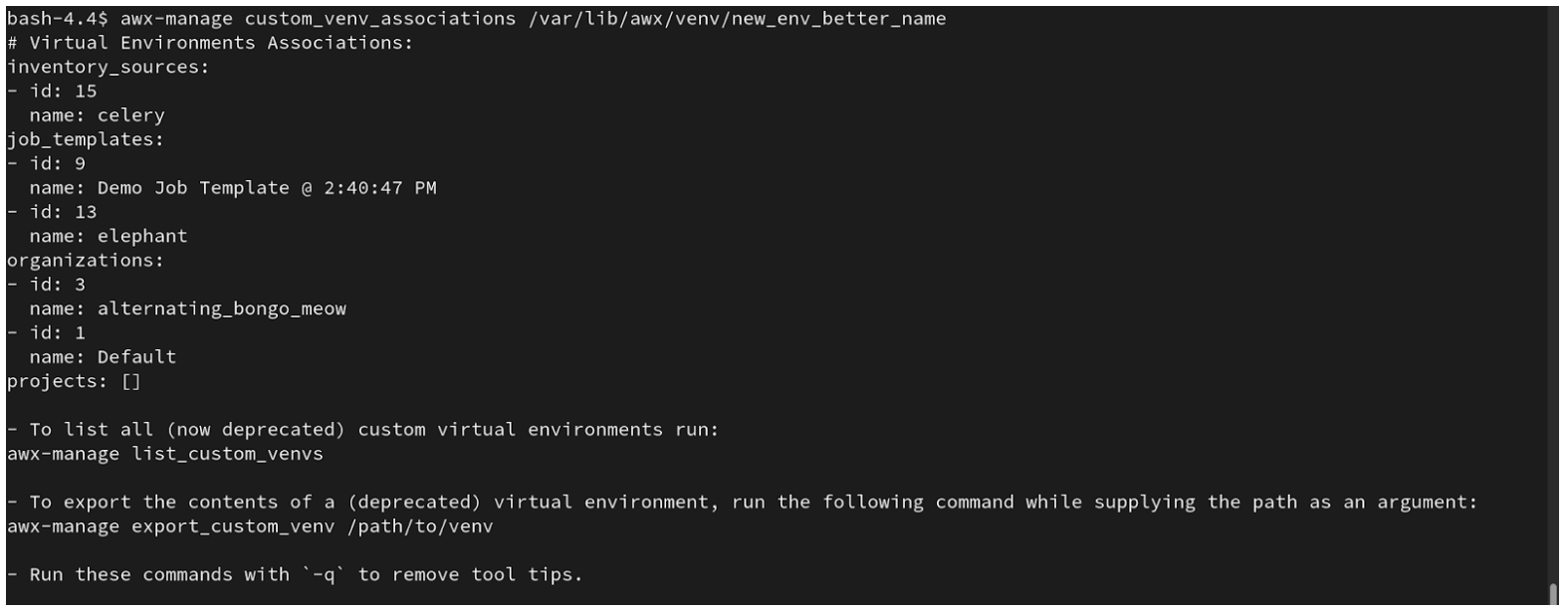

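Use the custom_venv_associations command to view the organizations, jobs, and inventory sources that a custom virtual environment is associated with, so that you can determine which resources rely on it: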

$ awx-manage custom_venv_associations /this/is/the/path/

The following is an example of this command's output.

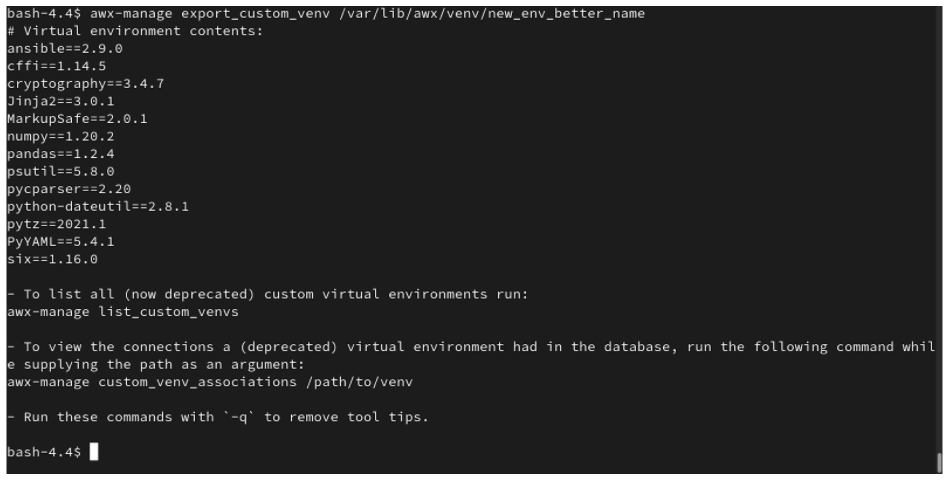

Select a path for the virtual environment that you want to migrate and specify it in the awx-manage export_custom_venv command:

$ awx-manage export_custom_venv /this/is/the/path/

The resulting output is essentially what a pip freeze command would produce: the contents of the selected custom virtual environment.

Note

All of these commands can be run with the -q option, which removes the instructional content provided with each output.
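The -q option is useful when the output is consumed by a script rather than read by a person. The following is a minimal Python sketch of that idea, not part of the product: the helper names `export_command` and `export_packages` are hypothetical, placing `-q` after the path is an assumption, and `export_packages` only works on a node where awx-manage is installed.

```python
import subprocess


def export_command(venv_path, quiet=True):
    """Build the awx-manage invocation for exporting one custom venv."""
    cmd = ["awx-manage", "export_custom_venv", venv_path]
    if quiet:
        # -q drops the explanatory text so that only package lines remain.
        cmd.append("-q")
    return cmd


def export_packages(venv_path):
    """Run the export (on a controller node) and return non-empty output lines."""
    result = subprocess.run(
        export_command(venv_path),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError if awx-manage exits non-zero
    )
    return [line for line in result.stdout.splitlines() if line.strip()]


# Building the command has no side effects, so it can be inspected anywhere.
command = export_command("/this/is/the/path/")
```

Calling `export_packages("/this/is/the/path/")` on a controller node would then return the package lines as a list, ready to be written to a requirements file.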

Now that you have the output from this pip freeze data, you can paste it into a definition file that can be used to spin up your new execution environment with ansible-builder. Anyone (both normal users and admins) can use ansible-builder to create an execution environment. For more information, see Building an Execution Environment in the Automation Controller User Guide.
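One way to picture this step is the Python sketch below, which writes exported package pins to a requirements file and generates a minimal version 1 ansible-builder definition that points at it. The helper name `write_ee_definition`, the file names, and the sample package pins are illustrative assumptions, and a real definition file may need more sections (for example, collections or system packages).

```python
import tempfile
from pathlib import Path

# Minimal ansible-builder definition (version 1 schema) that installs Python
# dependencies from a requirements file. The file names are illustrative.
DEFINITION_TEMPLATE = """\
---
version: 1
dependencies:
  python: {requirements_name}
"""


def write_ee_definition(pip_freeze_output, target_dir):
    """Write requirements.txt and execution-environment.yml into target_dir."""
    # Keep package lines only; skip blank lines and comments.
    packages = [
        line.strip()
        for line in pip_freeze_output.splitlines()
        if line.strip() and not line.lstrip().startswith("#")
    ]
    target_dir.mkdir(parents=True, exist_ok=True)
    requirements = target_dir / "requirements.txt"
    requirements.write_text("\n".join(packages) + "\n")
    definition = target_dir / "execution-environment.yml"
    definition.write_text(
        DEFINITION_TEMPLATE.format(requirements_name=requirements.name)
    )
    return definition


# Hypothetical export output; real data comes from awx-manage export_custom_venv.
sample = "# exported packages\ncertifi==2021.5.30\n\nrequests==2.25.1\n"
out_dir = Path(tempfile.mkdtemp())
definition_path = write_ee_definition(sample, out_dir)
```

Running `ansible-builder build --tag <image-name>` in that directory should then pick up execution-environment.yml, which is the tool's default definition file name.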

3.2. Migrate isolated instances to execution nodes

The move from isolated instance groups to execution nodes enables inbound or outbound connections. Contrast this with versions 3.8 and older where only outbound connections were allowed from controller nodes to isolated nodes.

Migrating legacy isolated instance groups to execution nodes, so that they function properly in the automation controller mesh architecture in 4.1, is a preflight function of the installer that creates a new inventory file based on your old one. Although both .ini and .yml files are still accepted formats, the generated output is currently only an .ini file.

The preflight check leverages Ansible, and Ansible flattens the concept of children. As a result, not every inventory file can be replicated exactly, although the result is very close: it is functionally the same to Ansible but may look different to you. The automated preflight processing does its best to create child relationships based on heuristics, but the tool lacks the nuance and judgment of a human user. Therefore, once the file is created, do NOT use it as-is. Check the file over and use it as a template to ensure that it works well for both you and the Ansible engine.
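To illustrate what "flattening children" means, the Python sketch below resolves each group to its full set of hosts, including hosts inherited from child groups. It is a simplified model and not Ansible's actual inventory code; the function `hosts_of` and the two dictionary layouts are hypothetical, and only the group and host names are taken from the example inventory in this section.

```python
def hosts_of(group, hosts_by_group, children_by_group, _seen=None):
    """Return every host in `group`, including hosts of nested child groups."""
    seen = set() if _seen is None else _seen
    if group in seen:  # already visited: avoids loops in malformed inventories
        return set()
    seen.add(group)
    hosts = set(hosts_by_group.get(group, ()))
    for child in children_by_group.get(group, ()):
        hosts |= hosts_of(child, hosts_by_group, children_by_group, seen)
    return hosts


node = "isolated-node.c.towertest-188910.internal"

# Layout 1: execution_nodes gets its host through a child group.
nested_hosts = {"instance_group_restrictedzone": [node]}
nested_children = {"execution_nodes": ["instance_group_restrictedzone"]}

# Layout 2: the same membership written without any child relationship.
flat_hosts = {"execution_nodes": [node], "instance_group_restrictedzone": [node]}
flat_children = {}

nested_result = hosts_of("execution_nodes", nested_hosts, nested_children)
flat_result = hosts_of("execution_nodes", flat_hosts, flat_children)
```

Both layouts resolve to the same host set, which is why a regenerated file can look different from the original while meaning the same thing to Ansible.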

Here is an example of a before and after preflight check, demonstrating how Ansible flattens an inventory file and how the installer reconstructs a new inventory file. To Ansible, both of these files are essentially the same.

Old style (from Ansible docs):

    [tower]
    localhost ansible_connection=local

    [database]

    [all:vars]
    admin_password='******'
    pg_host=''
    pg_port=''
    pg_database='awx'
    pg_username='awx'
    pg_password='******'
    rabbitmq_port=5672
    rabbitmq_vhost=tower
    rabbitmq_username=tower
    rabbitmq_password='******'
    rabbitmq_cookie=cookiemonster
    # Needs to be true for fqdns and ip addresses
    rabbitmq_use_long_name=false

    [isolated_group_restrictedzone]
    isolated-node.c.towertest-188910.internal

    [isolated_group_restrictedzone:vars]
    controller=tower

New style (generated by installer):

    [all:vars]
    admin_password='******'
    pg_host=''
    pg_port=''
    pg_database='awx'
    pg_username='awx'
    pg_password='******'
    rabbitmq_port=5672
    rabbitmq_vhost='tower'
    rabbitmq_username='tower'
    rabbitmq_password='******'
    rabbitmq_cookie='cookiemonster'
    rabbitmq_use_long_name='false'

    # In AAP 2.X [tower] has been renamed to [automationcontroller]
    # Nodes in [automationcontroller] will be hybrid by default, capable of executing user jobs.
    # To specify that any of these nodes should be control-only instead, give them a host var of `node_type=control`
    [automationcontroller]
    localhost

    [automationcontroller:vars]
    # in AAP 2.X the controller variable has been replaced with `peers`
    # which allows finer grained control over node communication.
    # `peers` can be set on individual hosts, to a combination of multiple groups and hosts.
    peers='instance_group_restrictedzone'
    ansible_connection='local'

    # in AAP 2.X isolated groups are no longer a special type, and should be renamed to be instance groups
    [instance_group_restrictedzone]
    isolated-node.c.towertest-188910.internal

    [instance_group_restrictedzone:vars]
    # in AAP 2.X Isolated Nodes are converted into Execution Nodes using node_state=iso_migrate
    node_state='iso_migrate'

    # In AAP 2.X Execution Nodes have replaced isolated nodes. All of these nodes will be by default
    # `node_type=execution`. You can specify new nodes that cannot execute jobs and are intermediaries
    # between your control and execution nodes by adding them to [execution_nodes] and setting a host var
    # `node_type=hop` on them.
    [execution_nodes]

    [execution_nodes:children]
    instance_group_restrictedzone

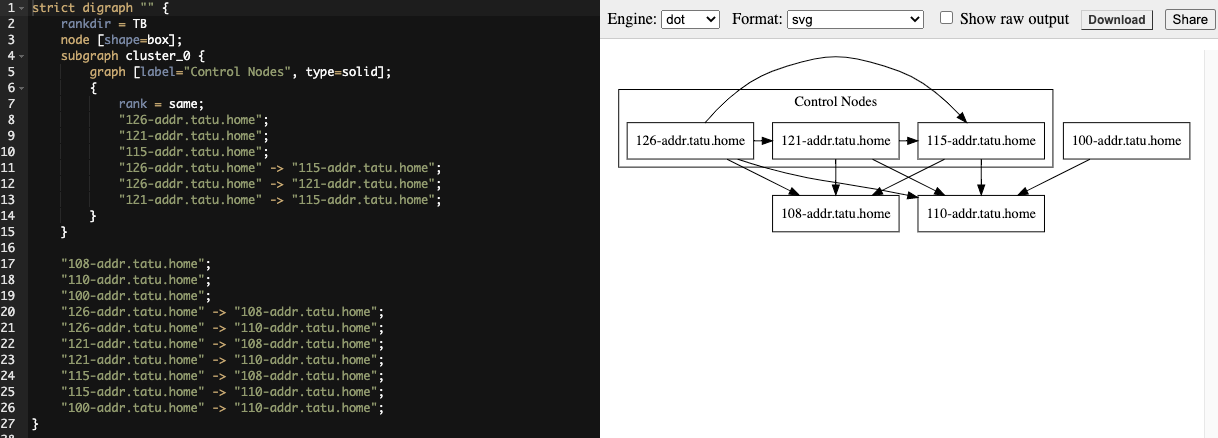

3.3. View mesh topology

If you configured a mesh topology, the installer can graphically validate your mesh configuration through a generated graph rendering tool. The graph is generated by reading the contents of the inventory file. See the Red Hat Ansible Automation Platform automation mesh guide for further detail.

Any given inventory file must include some sort of execution capacity that is governed by at least one control node. That is, it is unacceptable to produce an inventory file that only contains control-only nodes, execution-only nodes or hop-only nodes. There is a tightly coupled relationship between control and execution nodes that must be respected at all times. The installer will fail if the inventory files aren't properly defined. The only exception to this rule would be a single hybrid node, as it will satisfy the control and execution constraints.
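The capacity rule above can be expressed as a small check. The Python sketch below is an illustration under stated assumptions, not the installer's validation logic: the function name `has_valid_capacity` is hypothetical, `hybrid` is assumed to be the node_type value for hybrid nodes, and peers and network reachability are not modeled at all.

```python
# Node types that can provide each kind of capacity. A hybrid node counts for
# both, which is why a single hybrid node is valid on its own.
CONTROL_CAPABLE = {"control", "hybrid"}
EXECUTION_CAPABLE = {"execution", "hybrid"}


def has_valid_capacity(node_types):
    """Return True if the nodes provide both control and execution capacity."""
    types = set(node_types)
    has_control = bool(types & CONTROL_CAPABLE)
    has_execution = bool(types & EXECUTION_CAPABLE)
    return has_control and has_execution


single_hybrid = has_valid_capacity(["hybrid"])               # the stated exception
control_only = has_valid_capacity(["control", "control"])    # no execution capacity
execution_only = has_valid_capacity(["execution"])           # nothing governs it
hop_only = has_valid_capacity(["hop"])                       # hop nodes run no jobs
mixed = has_valid_capacity(["control", "hop", "execution"])
```

Here `single_hybrid` and `mixed` are valid layouts, while the three single-type layouts are rejected.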

To run jobs on an execution node, either the installer needs to pre-register the node, or a user needs to make a PATCH request to /api/v2/instances/N/ to change the enabled field to true.
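A minimal Python sketch of such a PATCH request, using only the standard library, might look as follows. The controller URL, the instance ID, the token value, and the helper name `build_enable_request` are placeholders, and Bearer token authentication is an assumption about how your controller is set up. The request is only constructed here, not sent.

```python
import json
import urllib.request


def build_enable_request(base_url, instance_id, token):
    """Build (but do not send) a PATCH that sets enabled=true on one instance."""
    url = f"{base_url.rstrip('/')}/api/v2/instances/{instance_id}/"
    body = json.dumps({"enabled": True}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PATCH",
        headers={
            "Content-Type": "application/json",
            # Assumes an OAuth2 token; adjust to your authentication method.
            "Authorization": f"Bearer {token}",
        },
    )


# Placeholder values for illustration only.
request = build_enable_request("https://controller.example.com", 3, "TOKEN")
```

Passing the result to `urllib.request.urlopen(request)` would send it. The instance ID (N in the path above) has to be looked up for the node in question.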