30. Troubleshooting the controller

30.1. Error logs

Controller server errors are logged in /var/log/tower. Supervisor logs can be found in /var/log/supervisor/. Nginx web server errors are logged in the httpd error log. Configure other controller logging needs in /etc/tower/conf.d/.

Explore client-side issues using the JavaScript console built into most browsers and report any errors to Ansible via the Red Hat Customer portal at https://access.redhat.com/.

30.2. sosreport

sosreport is a utility that collects diagnostic information that Support can use to analyze and investigate the issues you report. To provide this information to Technical Support, refer to the Knowledgebase article for sosreport on the Red Hat Customer portal and perform the following procedures:

Install the sosreport utility.

Provide the sosreport to Red Hat Support.

30.3. Problems connecting to your host

If you are unable to run the helloworld.yml example playbook from the Quick Start Guide or other playbooks due to host connection errors, try the following:

Can you ssh to your host? Ansible depends on SSH access to the servers you are managing.

Are your hostnames and IPs correctly added in your inventory file? (Check for typos.)
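As a rough first check before debugging SSH itself, you can confirm that the SSH port on each inventory host accepts TCP connections. The following is a minimal sketch, not part of the controller: the function name `can_reach_ssh` is hypothetical, and port 22 is assumed as the SSH port. A successful connection only shows that the port is reachable; it does not prove that authentication will succeed.

```python
import socket


def can_reach_ssh(host: str, port: int = 22, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened.

    Assumption: SSH listens on port 22 unless another port is passed in.
    """
    try:
        # create_connection resolves the name and opens the TCP connection.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False
```

Call it once per hostname or IP exactly as written in your inventory, so that a typo shows up as an unreachable host.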

30.4. Unable to log in to the controller via HTTP

Access to the controller is intentionally restricted through a secure protocol (HTTPS). In cases where your configuration is set up to run a controller node behind a load balancer or proxy as "HTTP only", and you only want to access it without SSL (for troubleshooting, for example), you must add the following settings in the custom.py file located at /etc/tower/conf.d of your controller instance:

SESSION_COOKIE_SECURE = False

CSRF_COOKIE_SECURE = False

Changing these settings to False will allow the controller to manage cookies and login sessions when using the HTTP protocol. This must be done on every node of a cluster installation to properly take effect.

To apply the changes, run:

automation-controller-service restart
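Because the change has to be made on every node, it can help to verify each node's custom.py before restarting. The sketch below is only an illustration under stated assumptions: the helper name `check_http_settings` is hypothetical, and it only recognizes simple top-level assignments of literal values, which is how the two settings are written above.

```python
import ast

# The two settings from this section and the values they must have.
REQUIRED = {"SESSION_COOKIE_SECURE": False, "CSRF_COOKIE_SECURE": False}


def check_http_settings(source: str) -> dict:
    """Map each required setting to True if the source sets it as expected."""
    found = {}
    for node in ast.parse(source).body:
        # Only plain assignments of literals, e.g. NAME = False, are inspected.
        if isinstance(node, ast.Assign) and isinstance(node.value, ast.Constant):
            for target in node.targets:
                if isinstance(target, ast.Name):
                    found[target.id] = node.value.value  # last assignment wins
    return {
        name: name in found and found[name] is expected
        for name, expected in REQUIRED.items()
    }
```

Pass it the text of /etc/tower/conf.d/custom.py read from each node; any setting mapped to False still needs to be changed there.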

30.5. WebSockets port for live events not working

Automation controller uses ports 80 and 443 on the controller server to stream live updates of playbook activity and other events to the client browser. These are the default ports. If a firewall blocks them, make sure your firewall allows traffic through these ports, and close any firewall rules that were opened or added for the previous websocket ports.

30.6. Problems running a playbook

If you are unable to run the helloworld.yml example playbook from the Quick Start Guide or other playbooks due to playbook errors, try the following:

Are you authenticating with the user currently running the commands? If not, check how the username has been set up or pass the --user=username or -u username options to specify a user.

Is your YAML file correctly indented? You may need to line up your whitespace correctly. Indentation level is significant in YAML. You can use yamllint to check your playbook. For more information, refer to the YAML primer at: http://docs.ansible.com/YAMLSyntax.html

Items beginning with a - are considered list items or plays. Items with the format of key: value operate as hashes or dictionaries. Ensure you don't have extra or missing - plays.
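One common cause of indentation errors is a tab character in the leading whitespace, which YAML does not allow for indentation. The following minimal sketch flags such lines. The function name `find_tab_indents` is hypothetical, and this is a narrow check, not a substitute for a full YAML linter.

```python
from typing import List


def find_tab_indents(text: str) -> List[int]:
    """Return 1-based line numbers whose leading whitespace contains a tab."""
    bad_lines = []
    for number, line in enumerate(text.splitlines(), start=1):
        # Leading whitespace is everything removed by stripping spaces and tabs.
        indent = line[: len(line) - len(line.lstrip(" \t"))]
        if "\t" in indent:
            bad_lines.append(number)
    return bad_lines
```

Replace the tabs on any reported line with spaces that match the surrounding indentation level.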

30.7. Problems when running a job

If you are having trouble running a job from a playbook, you should review the playbook YAML file. When importing a playbook, either manually or via a source control mechanism, keep in mind that the host definition is controlled by the controller and should be set to hosts: all.
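To review many imported playbooks quickly, you could scan them for hosts lines that are set to something other than all. This is a rough text heuristic under stated assumptions, not a YAML parser: the name `non_all_hosts` is hypothetical, and only single-line `hosts:` entries are recognized.

```python
import re
from typing import List, Tuple

# Matches "hosts: value" or "- hosts: value", ignoring a trailing comment.
HOSTS_LINE = re.compile(r"^\s*(?:-\s+)?hosts:\s*(?P<value>[^#]*?)\s*(?:#.*)?$")


def non_all_hosts(text: str) -> List[Tuple[int, str]]:
    """Return (line number, value) for each hosts line not set to all."""
    hits = []
    for number, line in enumerate(text.splitlines(), start=1):
        match = HOSTS_LINE.match(line)
        # Quotes are stripped so that "all" and 'all' count as all.
        if match and match.group("value").strip("'\"") != "all":
            hits.append((number, match.group("value")))
    return hits
```

An empty result means every hosts line the heuristic found is already set to all.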

30.8. Playbooks aren't showing up in the "Job Template" drop-down

If your playbooks are not showing up in the Job Template drop-down list, here are a few things you can check:

Make sure that the playbook is valid YAML and can be parsed by Ansible.

Make sure the permissions and ownership of the project path (/var/lib/awx/projects) are set up so that the "awx" system user can view the files. You can run this command to change the ownership:

chown awx -R /var/lib/awx/projects/
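Before or after changing ownership, you may want to see which entries under the project path are not owned by the expected user. The sketch below assumes a POSIX system. The function name `files_not_owned_by` is hypothetical, and it checks ownership only, not permission bits.

```python
import os
from typing import List


def files_not_owned_by(root: str, uid: int) -> List[str]:
    """Return paths under root whose owner is not the given numeric user ID."""
    mismatched = []
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            # lstat inspects the entry itself rather than following symlinks.
            if os.lstat(path).st_uid != uid:
                mismatched.append(path)
    return mismatched
```

On the controller server, the numeric ID for the awx user can be looked up with `pwd.getpwnam("awx").pw_uid` and passed in together with /var/lib/awx/projects.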

30.9. Playbook stays in pending

If you are attempting to run a playbook Job and it stays in the "Pending" state indefinitely, try the following:

Ensure all supervisor services are running via supervisorctl status.

Check to ensure that the /var/ partition has more than 1 GB of space available. Jobs will not complete with insufficient space on the /var/ partition.

Run automation-controller-service restart on the controller server.

If you continue to have problems, run sosreport as root on the controller server, then file a support request with the result.
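The free-space check on /var can be scripted. This is a minimal sketch: the function name `has_enough_space` is hypothetical, and treating 1 GB as 1024 ** 3 bytes is an assumption, since the threshold above is not defined more precisely.

```python
import shutil

# Assumption: "1 GB" is interpreted as 1024 ** 3 bytes.
ONE_GB = 1024 ** 3


def has_enough_space(path: str = "/var", minimum_bytes: int = ONE_GB) -> bool:
    """Return True if the filesystem holding path has more free space than minimum_bytes."""
    return shutil.disk_usage(path).free > minimum_bytes
```

Run it on the controller server with the default arguments; a result of False points to disk space as a likely reason for jobs staying in Pending.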

30.10. Cancel a controller job

When issuing a cancel request on a currently running controller job, the controller issues a SIGINT to the ansible-playbook process. While this causes Ansible to stop dispatching new tasks and exit, in many cases, module tasks that were already dispatched to remote hosts will run to completion. This behavior is similar to pressing Ctrl-C during a command-line Ansible run.
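To see what a SIGINT does to a running process, you can reproduce the signal on a harmless child process. This is only an illustration on a POSIX system, not the controller's own cancel code. The function name `interrupt_child` is hypothetical, and a sleeping Python process stands in for ansible-playbook.

```python
import signal
import subprocess
import sys
import time


def interrupt_child(grace_seconds: float = 0.5) -> int:
    """Start a sleeping child process, send it SIGINT, and return its exit status."""
    child = subprocess.Popen(
        [sys.executable, "-c", "import time; time.sleep(30)"],
        stderr=subprocess.DEVNULL,  # hide the child's interrupt traceback
    )
    time.sleep(grace_seconds)  # give the child time to start
    child.send_signal(signal.SIGINT)  # the same signal that Ctrl-C delivers
    return child.wait(timeout=10)
```

The child stops long before its 30-second sleep would finish and reports a nonzero exit status. Work that such a process had already handed off elsewhere, like module tasks dispatched to remote hosts, is not stopped by this signal.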

With respect to software dependencies, if a running job is canceled, the job is essentially removed but the dependencies will remain.

30.11. Reusing an external database causes installations to fail

Instances have been reported where reusing the external DB during subsequent installation of nodes causes installation failures.

For example, suppose you performed a clustered installation, then needed to repeat it and performed a second clustered installation that reused the same external database, and this subsequent installation failed.

When setting up an external database which has been used in a prior installation, the database used for the clustered node must be manually cleared before any additional installations can succeed.

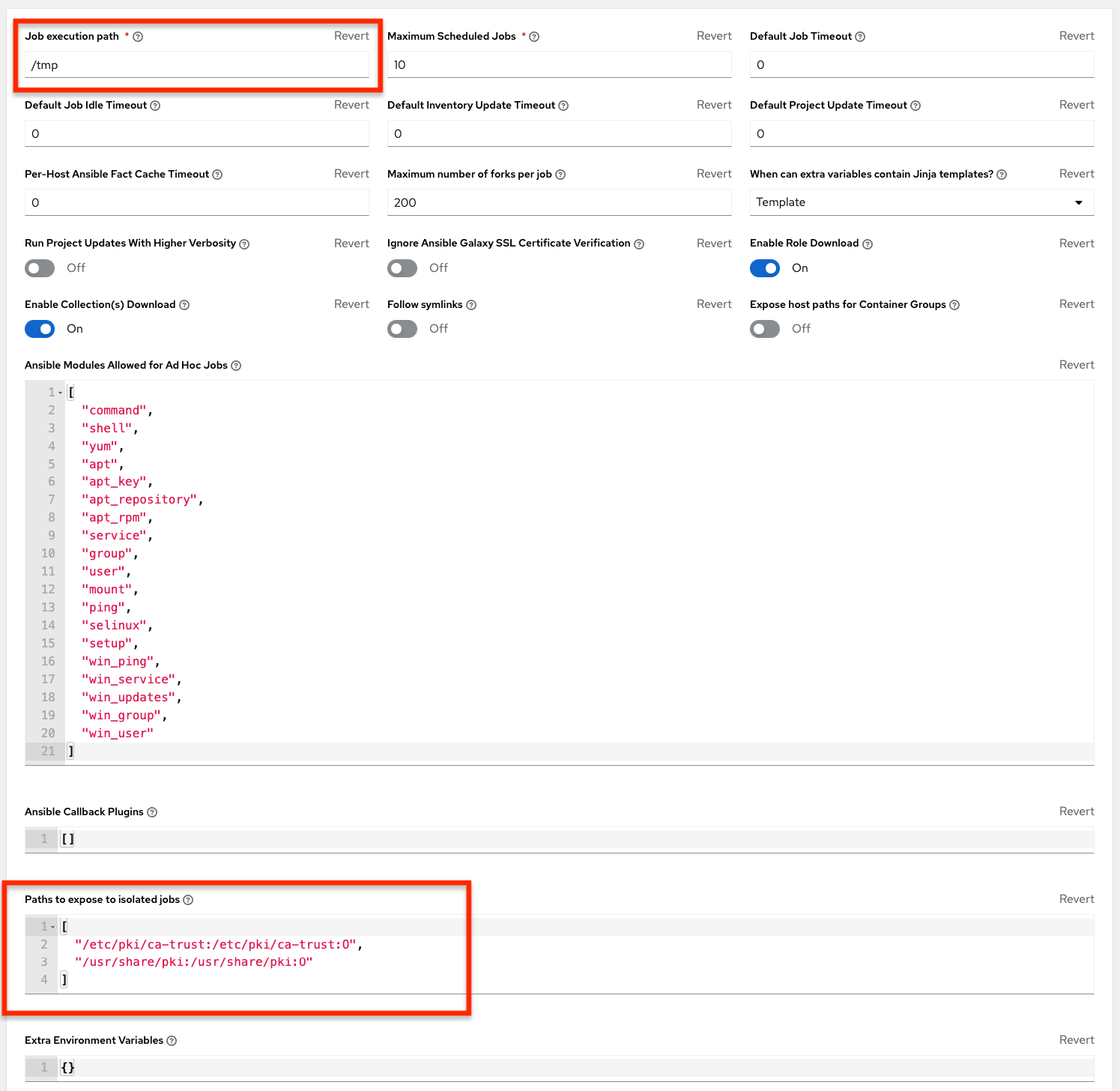

Automation controller uses container technology to isolate jobs from each other. By default, only the current project is exposed to the container running a job template.

You may find that you need to customize your playbook runs to expose additional directories. To fine tune your usage of job isolation, there are certain variables that can be set.

By default, automation controller will use the system's tmp directory (/tmp by default) as its staging area. This can be changed in the Job Execution Path field of the Jobs settings screen, or in the REST API at /api/v2/settings/jobs:

AWX_ISOLATION_BASE_PATH = "/opt/tmp"

If there are any additional directories that should specifically be exposed from the host to the container that playbooks run in, you can specify those in the Paths to Expose to Isolated Jobs field of the Jobs settings screen, or in the REST API at /api/v2/settings/jobs:

AWX_ISOLATION_SHOW_PATHS = ['/list/of/', '/paths']

Note

The primary file you may want to add to AWX_ISOLATION_SHOW_PATHS is /var/lib/awx/.ssh, if your playbooks need to use keys or settings defined there.

The above fields can be found in the Jobs Settings window.
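If you prefer the REST API to the settings screen, the request could be built as in the sketch below. Several details are assumptions rather than facts from this guide: the function name `build_show_paths_request` is hypothetical, the trailing slash on the endpoint, the PATCH method, and the Bearer token header reflect typical usage and should be checked against your controller's API documentation, and the host name and token in the usage note are placeholders.

```python
import json
import urllib.request
from typing import List


def build_show_paths_request(
    base_url: str, token: str, paths: List[str]
) -> urllib.request.Request:
    """Build, without sending, a request that sets AWX_ISOLATION_SHOW_PATHS."""
    body = json.dumps({"AWX_ISOLATION_SHOW_PATHS": paths}).encode("utf-8")
    return urllib.request.Request(
        # Assumption: the settings endpoint is addressed with a trailing slash.
        url=base_url.rstrip("/") + "/api/v2/settings/jobs/",
        data=body,
        method="PATCH",  # assumption: partial update of the jobs settings
        headers={
            "Content-Type": "application/json",
            # Assumption: authentication uses an OAuth2 bearer token.
            "Authorization": f"Bearer {token}",
        },
    )
```

For example, `build_show_paths_request("https://controller.example.com", "TOKEN", ["/var/lib/awx/.ssh"])` prepares the request, and passing the result to `urllib.request.urlopen` would send it.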

30.12. Private EC2 VPC Instances in the controller Inventory

By default, the controller only shows instances in a VPC that have an Elastic IP (EIP) associated with them. To see all of your VPC instances, perform the following steps:

In the controller interface, select your inventory.

Click on the group that has the Source set to AWS, and click on the Source tab.

In the Source Variables box, enter:

vpc_destination_variable: private_ip_address

Next, save and then trigger an update of the group. Once this is done, you should be able to see all of your VPC instances.

Note

The controller must be running inside the VPC with access to those instances if you want to configure them.

30.13. Troubleshooting "Error: provided hosts list is empty"

If you receive the message "Skipping: No Hosts Matched" when you are trying to run a playbook through the controller, here are a few things to check:

Make sure that your hosts declaration line in your playbook matches the name of your group/host in inventory exactly (these are case sensitive).

If it does match and you are using Ansible Core 2.0 or later, check your group names for spaces and modify them to use underscores or no spaces to ensure that the groups can be recognized.

Make sure that if you have specified a Limit in the Job Template that it is a valid limit value and still matches something in your inventory. The Limit field takes a pattern argument, described here: http://docs.ansible.com/intro_patterns.html

Please file a support ticket if you still run into issues after checking these options.
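The group-name check described above can be sketched as a small helper that finds names containing whitespace and proposes an underscore version. The function name `suggest_group_renames` is hypothetical, and the renames are only suggestions. Apply them in your inventory and in each playbook's hosts line so that the two continue to match exactly.

```python
from typing import Dict, List


def suggest_group_renames(group_names: List[str]) -> Dict[str, str]:
    """Map each group name containing whitespace to an underscore-joined name."""
    return {
        # split() with no arguments collapses runs of whitespace.
        name: "_".join(name.split())
        for name in group_names
        if any(ch.isspace() for ch in name)
    }
```

Names without spaces are left out of the result, so an empty dictionary means no group needs renaming.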