7. Clustering¶

Clustering is an alternate approach to redundancy, replacing the redundancy solution configured with the active-passive nodes that involves primary and secondary instances. For versions older than 3.1, refer to the older versions of this chapter of the Ansible Tower Administration Guide.

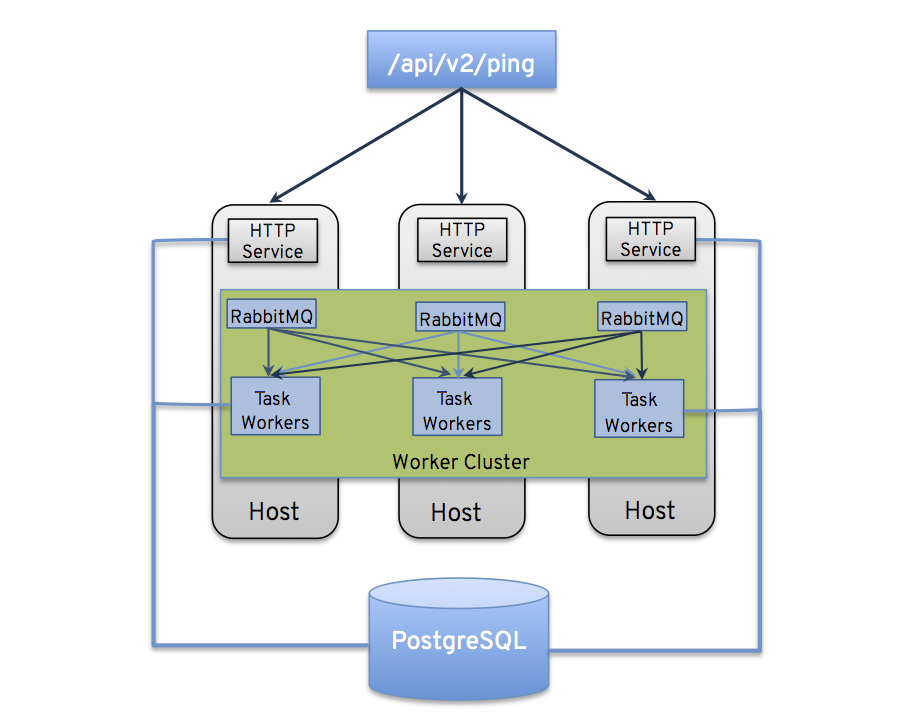

Clustering is sharing load between hosts. Each instance should be able to act as an entry point for UI and API access. This should enable Tower administrators to use load balancers in front of as many instances as they wish and maintain good data visibility.

Note

Load balancing is optional and is entirely possible to have ingress on one or all instances as needed.

Each instance should be able to join the Tower cluster and expand its ability to execute jobs. This is a simple system where jobs can and will run anywhere rather than be directed on where to run. Also, clustered instances can be grouped into different pools/queues, called Instance Groups.

Tower supports container-based clusters using OpenShift, meaning new Tower instances can be installed on this platform without any variation or diversion in functionality. However, there are certain OpenShift Deployment and Configuration differences that must be considered. In Ansible Tower version 3.6, you can create instance groups to point to a Kubernetes container. For more detail, see the Execution Environments section.

Supported Operating Systems

The following operating systems are supported for establishing a clustered environment:

Red Hat Enterprise Linux 7 or later (RHEL8 recommended, can be either RHEL 7 or Centos 7 instances)

Note

Isolated instances are not supported in conjunction with running Ansible Tower in OpenShift.

7.1. Setup Considerations¶

This section covers initial setup of clusters only. For upgrading an existing cluster, refer to Upgrade Planning of the Ansible Tower Upgrade and Migration Guide.

Important considerations to note in the new clustering environment:

PostgreSQL is still a standalone instance and is not clustered. Tower does not manage replica configuration or database failover (if the user configures standby replicas).

When spinning up a cluster, the database node should be a standalone server, and PostgreSQL should not be installed on one of the Tower nodes.

The number of instances in a cluster should always be an odd number and it is strongly recommended that a minimum of three Tower instances be in a cluster and the supported maximum is 20. When it gets that high, the requirement for odd number of nodes becomes less important.

All instances should be reachable from all other instances and they should be able to reach the database. It is also important for the hosts to have a stable address and/or hostname (depending on how the Tower host is configured).

All instances must be geographically collocated, with reliable low-latency connections between instances.

RabbitMQ is the cornerstone of Tower’s clustering system. A lot of the configuration requirements and behavior is dictated by its needs. Therefore, customization beyond Tower’s setup playbook is limited. Each Tower instance has a deployment of RabbitMQ that will cluster with the other instances’ RabbitMQ instances.

For purposes of upgrading to a clustered environment, your primary instance must be part of the

towergroup in the inventory AND it needs to be the first host listed in thetowergroup.Manual projects must be manually synced to all instances by the customer, and updated on all instances at once.

There is no concept of primary/secondary in the new Tower system. All systems are primary.

Setup playbook changes to configure RabbitMQ and provide the type of network the hosts are on.

The

inventoryfile for Tower deployments should be saved/persisted. If new instances are to be provisioned, the passwords and configuration options, as well as host names, must be made available to the installer.

7.2. Install and Configure¶

Provisioning new instances involves updating the inventory file and re-running the setup playbook. It is important that the inventory file contains all passwords and information used when installing the cluster or other instances may be reconfigured. The current standalone instance configuration does not change for a 3.1 or later deployment. The inventory file does change in some important ways:

Since there is no primary/secondary configuration, those inventory groups go away and are replaced with a single inventory group,

tower.

Note

All instances are responsible for various housekeeping tasks related to task scheduling, like determining where jobs are supposed to be launched and processing playbook events, as well as periodic cleanup.

[tower]

hostA

hostB

hostC

[instance_group_east]

hostB

hostC

[instance_group_west]

hostC

hostD

Note

If no groups are selected for a resource then the tower group is used, but if any other group is selected, then the tower group will not be used in any way.

The database group remains for specifying an external PostgreSQL. If the database host is provisioned separately, this group should be empty:

[tower]

hostA

hostB

hostC

[database]

hostDB

It is common to provision Tower instances externally, but it is best to reference them by internal addressing. This is most significant for RabbitMQ clustering where the service is not available at all on an external interface. For this purpose, it is necessary to assign the internal address for RabbitMQ links as such:

[tower]

hostA rabbitmq_host=10.1.0.2

hostB rabbitmq_host=10.1.0.3

hostC rabbitmq_host=10.1.0.4

Note

The number of instances in a cluster should always be an odd number and it is strongly recommended that a minimum of three Tower instances be in a cluster and the supported maximum is 20. When it gets that high, the requirement for odd number of nodes becomes less important.

The

redis_passwordfield is removed from[all:vars]Fields for RabbitMQ are as follows:

rabbitmq_port=5672: RabbitMQ is installed on each instance and is not optional, it is also not possible to externalize it. This setting configures what port it listens on.rabbitmq_username=towerandrabbitmq_password=tower: Each instance and each instance’s Tower instance are configured with these values. This is similar to Tower’s other uses of usernames/passwords.rabbitmq_cookie=<somevalue>: This value is unused in a standalone deployment but is critical for clustered deployments. This acts as the secret key that allows RabbitMQ cluster members to identify each other.rabbitmq_enable_manager: Set this totrueto expose the RabbitMQ Management Web Console on each instance.

7.2.1. RabbitMQ Default Settings¶

The following configuration shows the default settings for RabbitMQ:

rabbitmq_port=5672

rabbitmq_username=tower

rabbitmq_password=''

rabbitmq_cookie=cookiemonster

Note

rabbitmq_cookie is a sensitive value, it should be treated like the secret key in Tower.

7.2.2. Instances and Ports Used by Tower¶

Ports and instances used by Tower are as follows:

80, 443 (normal Tower ports)

22 (ssh)

5432 (database instance - if the database is installed on an external instance, needs to be opened to the tower instances)

Clustering/RabbitMQ ports:

4369, 25672 (ports specifically used by RabbitMQ to maintain a cluster, needs to be open between each instance)

15672 (if the RabbitMQ Management Interface is enabled, this port needs to be opened (optional))

7.2.3. Optional SSH Authentication¶

For users who wish to manage SSH authentication from “controller” nodes to “isolated” nodes via some system outside of Tower (such as externally-managed passwordless SSH keys), this behavior can be disabled by unsetting two Tower API settings values:

HTTP PATCH /api/v2/settings/jobs/ {'AWX_ISOLATED_PRIVATE_KEY': '', 'AWX_ISOLATED_PUBLIC_KEY': ''}

7.3. Status and Monitoring via Browser API¶

Tower itself reports as much status as it can via the Browsable API at /api/v2/ping in order to provide validation of the health of the cluster, including:

The instance servicing the HTTP request

The timestamps of the last heartbeat of all other instances in the cluster

Instance Groups and Instance membership in those groups

View more details about Instances and Instance Groups, including running jobs and membership information at /api/v2/instances/ and /api/v2/instance_groups/.

7.4. Instance Services and Failure Behavior¶

Each Tower instance is made up of several different services working collaboratively:

HTTP Services - This includes the Tower application itself as well as external web services.

Callback Receiver - Receives job events from running Ansible jobs.

Dispatcher - The worker queue that processes and runs all jobs.

RabbitMQ - This message broker is used as a signaling mechanism for the dispatcher as well as any event data propagated to the application.

Memcached - local caching service for the instance it lives on.

Tower is configured in such a way that if any of these services or their components fail, then all services are restarted. If these fail sufficiently often in a short span of time, then the entire instance will be placed offline in an automated fashion in order to allow remediation without causing unexpected behavior.

For backing up and restoring a clustered environment, refer to Backup and Restore for Clustered Environments section.

7.5. Job Runtime Behavior¶

The way jobs are run and reported to a ‘normal’ user of Tower does not change. On the system side, some differences are worth noting:

When a job is submitted from the API interface it gets pushed into the dispatcher queue on RabbitMQ. A single RabbitMQ instance is the responsible master for individual queues but each Tower instance will connect to and receive jobs from that queue using a particular scheduling algorithm. Any instance in the cluster is just as likely to receive the work and execute the task. If a instance fails while executing jobs, then the work is marked as permanently failed.

Project updates behave differently in Ansible Tower 3.6. In previous versions, project updates were ordinary jobs that ran on a single instance. It is now important that they run successfully on any instance that could potentially run a job. Projects will sync themselves to the correct version on the instance immediately prior to running the job. If the needed revision is already locally checked out and galaxy or collections updates are not needed, then a sync may not be performed.

When the sync happens, it is recorded in the database as a project update with a

launch_type = syncandjob_type = run. Project syncs will not change the status or version of the project; instead, they will update the source tree only on the instance where they run.

7.5.1. Job Runs¶

By default, when a job is submitted to the tower queue, it can be picked up by any of the workers. However, you can control where a particular job runs, such as restricting the instances from which a job runs on.

In order to support temporarily taking an instance offline, there is a property enabled defined on each instance. When this property is disabled, no jobs will be assigned to that instance. Existing jobs will finish, but no new work will be assigned.

7.6. Deprovision Instances¶

Re-running the setup playbook does not automatically deprovision instances since clusters do not currently distinguish between an instance that was taken offline intentionally or due to failure. Instead, shut down all services on the Tower instance and then run the deprovisioning tool from any other instance:

Shut down the instance or stop the service with the command,

ansible-tower-service stop.Run the deprovision command

$ awx-manage deprovision_instance --hostname=<name used in inventory file>from another instance to remove it from the Tower cluster registry AND the RabbitMQ cluster registry.Example:

awx-manage deprovision_instance --hostname=hostB

Similarly, deprovisioning instance groups in Tower does not automatically deprovision or remove instance groups. For more information, refer to the Deprovision Instance Groups section.