22. Tower Tips and Tricks

22.1. Using the Tower CLI Tool

Ansible Tower has a full-featured command line interface. It communicates with Tower via Tower’s REST API. You can install it from any machine with access to your Tower machine, or on Tower itself.

Installation can be done using the pip command:

pip install ansible-tower-cli

Refer to Introduction to tower-cli and https://github.com/ansible/tower-cli/blob/master/README.rst for configuration and usage instructions.

22.2. Launching a Job Template via the API

Ansible Tower makes it simple to launch a job based on a Job Template from Tower’s API or by using the tower-cli command line tool.

Launching a Job Template also:

- Creates a Job Record

- Gives that Job Record all of the attributes on the Job Template, combined with certain data you can give in this launch endpoint (“runtime” data)

- Runs Ansible with the combined data from the JT and runtime data

Runtime data takes precedence over the Job Template data, contingent on the corresponding ask_*_on_launch field on the job template being set to True. For example, a runtime credential is only accepted if the Job Template has ask_credential_on_launch set to True.

Launching a job from a Job Template via the API follows this workflow:

- GET https://your.tower.server/api/v2/job_templates/<your job template id>/launch/. The response will contain data such as job_template_data and defaults, which give information about the job template.

- Inspect the returned data for runtime data that is needed to launch. Inspecting the OPTIONS of the launch endpoint may also help deduce which POST fields are allowed.

Warning

Providing certain runtime credentials could introduce the need for a password not listed in passwords_needed_to_start.

    - passwords_needed_to_start: List of passwords needed

    - credential_needed_to_start: Boolean

    - inventory_needed_to_start: Boolean

    - variables_needed_to_start: List of fields that need to be passed inside of the extra_vars dictionary

- Inspect the returned data for optionally allowed runtime data that the user should be asked for.

    - ask_variables_on_launch: Boolean specifying whether to prompt the user for additional variables to pass to Ansible inside of extra_vars

    - ask_tags_on_launch: Boolean specifying whether to prompt the user for job_tags on launch (also allows use of skip_tags for convenience)

    - ask_job_type_on_launch: Boolean specifying whether to prompt the user for job_type on launch

    - ask_limit_on_launch: Boolean specifying whether to prompt the user for limit on launch

    - ask_inventory_on_launch: Boolean specifying whether to prompt the user for the related field inventory on launch

    - ask_credential_on_launch: Boolean specifying whether to prompt the user for the related field credential on launch

    - survey_enabled: Boolean specifying whether to prompt the user for additional extra_vars, following the job template’s survey_spec Q&A format

- POST to https://your.tower.server/api/v2/job_templates/<your job template id>/launch/ with any required data gathered during the previous steps. The variables that can be passed in the request data for this action include the following:

    - extra_vars: A string that represents a JSON or YAML formatted dictionary (with escaped parentheses) which includes variables given by the user, including answers to survey questions

    - job_tags: A string that represents a comma-separated list of tags in the playbook to run

    - limit: A string that represents a comma-separated list of hosts or groups to operate on

    - inventory: An integer value for the foreign key of an inventory to use in this job run

    - credential: An integer value for the foreign key of a credential to use in this job run
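
The inspection step above can be sketched in Python. This is a minimal sketch, not Tower's own client code: the function name unmet_launch_requirements and the sample values in the usage note are hypothetical, and only the *_needed_to_start field names come from the launch response described above. It assumes the GET response has already been parsed into a dictionary and that extra_vars is still a plain dictionary at this point.

```python
def unmet_launch_requirements(launch_info, extra_vars=None, credential=None, inventory=None):
    """Report which launch requirements the planned runtime data does not cover.

    launch_info is the parsed JSON from GET .../job_templates/<id>/launch/.
    The other arguments are the runtime values you plan to POST.
    """
    extra_vars = extra_vars or {}
    return {
        # True when the template needs a credential and none was supplied.
        "credential": bool(launch_info.get("credential_needed_to_start")) and credential is None,
        # True when the template needs an inventory and none was supplied.
        "inventory": bool(launch_info.get("inventory_needed_to_start")) and inventory is None,
        # Required variable names that are absent from extra_vars.
        "variables": [
            name
            for name in launch_info.get("variables_needed_to_start", [])
            if name not in extra_vars
        ],
        # Passwords are only echoed back; how they are submitted is not covered here.
        "passwords": list(launch_info.get("passwords_needed_to_start", [])),
    }
```

For example, if the response lists version and region under variables_needed_to_start and you pass extra_vars={"version": "1.2"}, the "variables" entry of the result is ["region"].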

The POST will return data about the job and information about whether the runtime data was accepted. The job id is given in the job field to maintain compatibility with tools written before 3.0. The response will look similar to:

    {
      "ignored_fields": {
        "credential": 2,
        "job_tags": "setup,teardown"
      },
      "id": 4,
      ...more data about the job...
      "job": 4
    }

In this example, values for credential and job_tags were given while the job template's ask_credential_on_launch and ask_tags_on_launch fields were False. These values were rejected because the job template author did not allow using runtime values for them.
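
The acceptance rule can be illustrated with a short Python sketch. It is a simplified model for reasoning about the response, not Tower's implementation: the names ASK_FLAG_FOR_FIELD and split_runtime_data are hypothetical, the mapping is taken from the ask_*_on_launch fields listed earlier in this section, and survey handling (survey_enabled) is deliberately left out.

```python
# Maps each runtime field to the job template flag that permits it.
ASK_FLAG_FOR_FIELD = {
    "extra_vars": "ask_variables_on_launch",
    "job_tags": "ask_tags_on_launch",
    "skip_tags": "ask_tags_on_launch",
    "job_type": "ask_job_type_on_launch",
    "limit": "ask_limit_on_launch",
    "inventory": "ask_inventory_on_launch",
    "credential": "ask_credential_on_launch",
}


def split_runtime_data(template, runtime):
    """Split runtime data into (accepted, ignored) based on the template's flags."""
    accepted, ignored = {}, {}
    for field, value in runtime.items():
        flag = ASK_FLAG_FOR_FIELD.get(field)
        # A field is accepted only when its flag exists and is set to True.
        if flag is not None and template.get(flag, False):
            accepted[field] = value
        else:
            ignored[field] = value
    return accepted, ignored
```

With ask_credential_on_launch and ask_tags_on_launch both False, passing credential 2 and job_tags "setup,teardown" puts both values in the ignored dictionary, which matches the ignored_fields entry in the sample response.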

You can see details about the job in this response. To get an updated status, you will need to do a GET request to the job page, /jobs/4, or follow the URL link in the response. You can also find related links to cancel, relaunch, and so forth.
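
Checking for an updated status usually means polling the job page until the job finishes. The following Python sketch shows one way to structure that loop. It rests on assumptions: the job JSON is assumed to carry a status field, the set of finished states is an assumption to verify against your Tower version, and wait_for_job and fetch_job are hypothetical names. The HTTP call is injected as a function so the loop itself stays independent of any client library.

```python
import time

# Assumed finished states; confirm the status values your Tower version reports.
FINISHED_STATES = {"successful", "failed", "error", "canceled"}


def wait_for_job(fetch_job, job_id, interval=5.0, max_polls=120):
    """Poll a job until it reaches a finished state and return the last job dict.

    fetch_job is any callable that takes a job id and returns the parsed
    JSON of a GET request to the job page, for example /api/v2/jobs/4/.
    """
    for _ in range(max_polls):
        job = fetch_job(job_id)
        if job.get("status") in FINISHED_STATES:
            return job
        time.sleep(interval)
    raise TimeoutError("job {0} did not finish after {1} polls".format(job_id, max_polls))
```

Passing the fetch function as an argument also makes the loop easy to exercise with canned responses before pointing it at a real server.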

Note

When querying a job on a non-execution node, the error message "stdout capture is missing" is displayed for the result_stdout field and on the related stdout page. To generate the stdout, use the format=txt_download query parameter on the related stdout page. This generates the stdout file, after which any refresh of either the job or the related stdout page displays the job output.

Note

You cannot assign a new inventory at the time of launch to a scan job. Scan jobs must be tied to a fixed inventory.

Note

You cannot change the Job Type at the time of launch to or from the type of “scan”. The ask_job_type_on_launch option only enables you to toggle “run” versus “check” at launch time.

22.3. tower-cli Job Template Launching

From the Tower command line, you can use tower-cli as a method of launching your Job Templates.

For help with tower-cli launch, use:

tower-cli job launch --help

For launching from a job template, invoke tower-cli in a way similar to:

tower-cli job launch --job-template=4

For an example of how to use the API, you can also add the -v flag here:

tower-cli job launch --job-template=4 -v

22.4. Changing the Tower Admin Password

During the installation process, you are prompted to enter an administrator password which is used for the admin superuser/first user created in Tower. If you log into the instance via SSH, it will tell you the default admin password in the prompt. If you need to change this password at any point, run the following command as root on the Tower server:

awx-manage changepassword admin

Next, enter a new password. After that, the password you have entered will work as the admin password in the web UI.

22.5. Creating a Tower Admin from the command line

Once in a while you may find it helpful to create an admin (superuser) account from the command line. To create an admin, run the following command as root on the Tower server and enter the admin information as prompted:

awx-manage createsuperuser

22.6. Setting up a jump host to use with Tower

Credentials supplied by Tower will not flow to the jump host via ProxyCommand. They are only used for the end-node once the tunneled connection is set up.

To make this work, configure a fixed user/keyfile in the AWX user’s SSH config in the ProxyCommand definition that sets up the connection through the jump host. For example:

    Host tampa
      Hostname 10.100.100.11
      IdentityFile [privatekeyfile]

    Host 10.100..
      Proxycommand ssh -W [jumphostuser]@%h:%p tampa

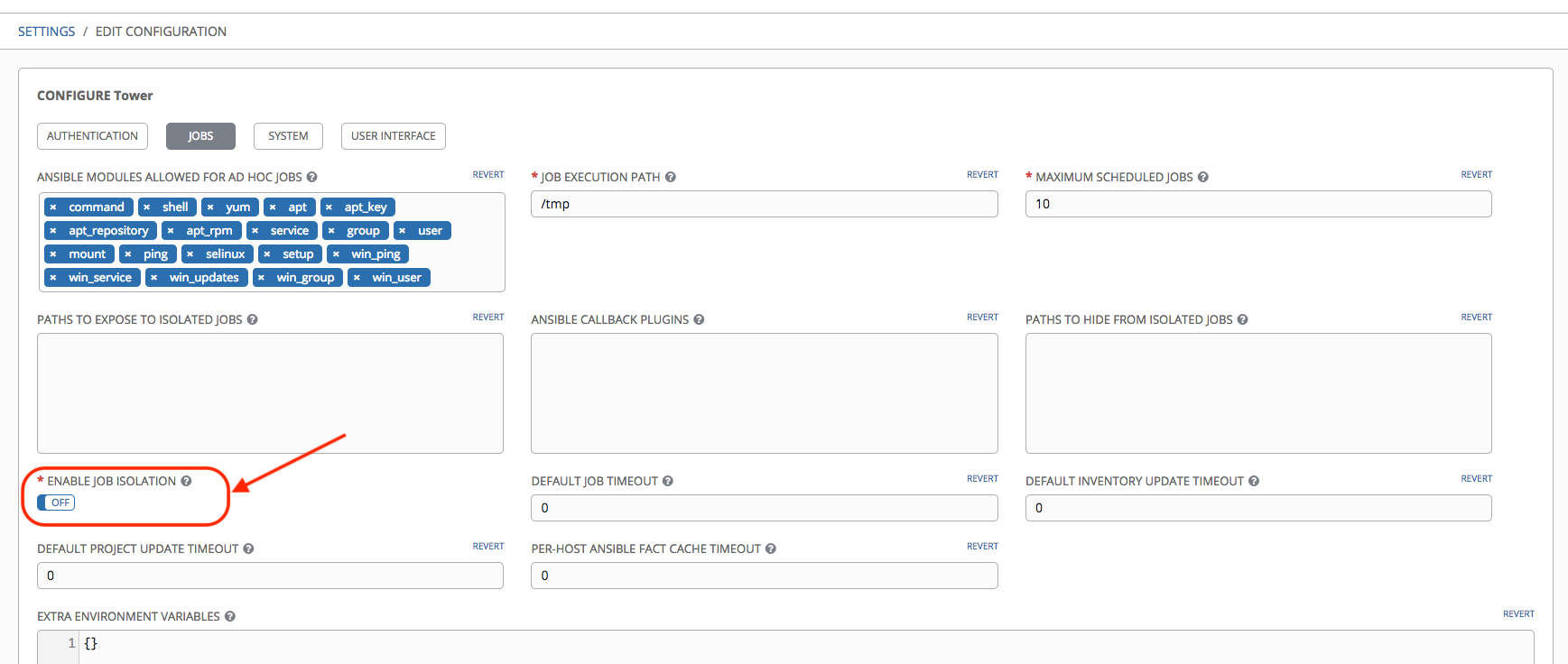

Note

You must disable PRoot by default if you need to use a jump host. You can disable PRoot through the Configure Tower user interface by setting the Enable Job Isolation toggle to OFF from the Jobs tab:

22.7. View Ansible outputs for JSON commands when using Tower

When working with Ansible Tower, you can use the API to obtain the Ansible outputs for commands in JSON format.

To view the Ansible outputs, browse to:

https://<tower server name>/api/v2/jobs/<job_id>/job_events/
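
Once a page of job events has been fetched and parsed, it can be filtered in a few lines of Python. This sketch makes several assumptions that you should check against your own API output: that the list response wraps its items in a results key, that each event carries event, host_name, and task fields, and that failures are reported with the event name runner_on_failed. The function names are hypothetical.

```python
def events_of_type(page, event_type):
    """Return the events on one parsed job_events page that match event_type."""
    return [event for event in page.get("results", []) if event.get("event") == event_type]


def summarize(event):
    """Build a short 'host: task' label for one event."""
    return "{0}: {1}".format(event.get("host_name", "?"), event.get("task", "?"))
```

For example, [summarize(e) for e in events_of_type(page, "runner_on_failed")] gives one label per failed task on that page.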

22.8. Locate and configure the Ansible configuration file

While Ansible does not require a configuration file, OS packages often include a default one in /etc/ansible/ansible.cfg for possible customization. In order to use a custom ansible.cfg file, place it at the root of your project. Ansible Tower runs ansible-playbook from the root of the project directory, where it will then find the custom ansible.cfg file. An ansible.cfg anywhere else in the project will be ignored.

To learn which values you can use in this file, refer to the configuration file on GitHub.

Using the defaults is acceptable for starting out, but know that you can configure the default module path or connection type here, as well as other things.

Tower overrides some ansible.cfg options. For example, Tower stores the SSH ControlMaster sockets, the SSH agent socket, and any other per-job run items in a per-job temporary directory, secured by multi-tenancy access control restrictions via PRoot.

22.9. View a listing of all ansible_ variables

Ansible by default gathers “facts” about the machines under its management, accessible in Playbooks and in templates. To view all facts available about a machine, run the setup module as an ad hoc action:

ansible -m setup hostname

This prints out a dictionary of all facts available for that particular host. For more information, refer to: https://docs.ansible.com/ansible/playbooks_variables.html#information-discovered-from-systems-facts

22.10. Using virtualenv with Ansible Tower

Ansible Tower 3.0 and later uses virtualenv. Virtualenv creates isolated Python environments to avoid problems caused by conflicting dependencies and differing versions. Virtualenv works by simply creating a folder which contains all of the necessary executables and dependencies for a specific version of Python. Ansible Tower creates two virtualenvs during installation–one is used to run Tower, while the other is used to run Ansible. This allows Tower to run in a stable environment, while allowing you to add or update modules to your Ansible Python environment as necessary to run your playbooks. For more information on virtualenv, see the Python Guide to Virtual Environments.

Note

It is highly recommended that you run umask 0022 before installing any packages to the virtual environment, such as a Python package added after the initial install. Failure to properly configure permissions can result in Tower service failures. For example:

# source /var/lib/awx/venv/ansible/bin/activate

# umask 0022

# pip install --upgrade pywinrm

# deactivate

22.10.1. Modifying the virtualenv

Modifying the virtualenv used by Tower is unsupported and not recommended. Instead, you can add modules to the virtualenv that Tower uses to run Ansible.

To do so, activate the Ansible virtualenv:

. /var/lib/awx/venv/ansible/bin/activate

...and then install whatever you need using pip:

pip install mypackagename

22.11. Configuring the towerhost hostname for notifications

In /etc/tower/settings.py, you can modify TOWER_URL_BASE='https://tower.example.com' to change the notification hostname, replacing https://tower.example.com with your preferred hostname. After saving your changes, restart Tower services with ansible-tower-service restart.

Refreshing your Tower license also changes the notification hostname. New installations of Ansible Tower 3.0 should not have to set the hostname for notifications.

22.12. Launching Jobs with curl

Note

Tower now offers a full-featured command line interface called tower-cli which may be of interest to you if you are considering using curl.

This method works with Tower versions 2.1.x and newer.

Launching jobs with the Tower API is simple. Here are some easy-to-follow examples using the curl tool.

Assuming that your Job Template ID is ‘1’, your Tower IP is 192.168.42.100, and that admin and awxsecret are valid login credentials, you can create a new job this way:

    curl -f -k -H 'Content-Type: application/json' -XPOST \
        --user admin:awxsecret \
        http://192.168.42.100/api/v2/job_templates/1/launch/

This returns a JSON object that you can parse and use to extract the ‘id’ field, which is the ID of the newly created job.
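
The same request can be prepared from Python with only the standard library. This is a sketch under assumptions rather than a supported client: build_launch_request and job_id_from_response are hypothetical helper names, HTTP Basic authentication is assumed to be what curl's --user option sends, and an empty JSON object is sent as the body. The -k option (skip TLS certificate checks) is not reproduced.

```python
import base64
import json
import urllib.request


def build_launch_request(base_url, template_id, username, password):
    """Prepare (but do not send) a POST to the job template launch endpoint."""
    url = "{0}/api/v2/job_templates/{1}/launch/".format(base_url.rstrip("/"), template_id)
    token = base64.b64encode("{0}:{1}".format(username, password).encode()).decode()
    return urllib.request.Request(
        url,
        data=b"{}",  # empty JSON object; no runtime data is passed
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": "Basic " + token,
        },
    )


def job_id_from_response(body):
    """Extract the new job's ID from the JSON text returned by the launch call."""
    return json.loads(body)["id"]


# Sending the request contacts the server, so it is left commented out here:
# request = build_launch_request("http://192.168.42.100", 1, "admin", "awxsecret")
# with urllib.request.urlopen(request) as response:
#     print(job_id_from_response(response.read()))
```

Keeping request construction separate from sending makes it possible to inspect the URL and headers before anything reaches the server.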

You can also pass extra variables to the Job Template call, such as is shown in the following example:

    curl -f -k -H 'Content-Type: application/json' -XPOST \
        -d '{"extra_vars": "{\"foo\": \"bar\"}"}' \
        --user admin:awxsecret http://192.168.42.100/api/v2/job_templates/1/launch/

You can view the live API documentation by logging into http://192.168.42.100/api/ and browsing around to the various objects available.

Note

The extra_vars parameter needs to be a string which contains JSON, not just a JSON dictionary, as you might expect. Use caution when escaping the quotes, etc.
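
Escaping by hand is error-prone, so it can help to let a JSON library produce the nested string. The Python sketch below encodes the variables twice: once to turn the dictionary into a JSON string, and once more to embed that string as the value of extra_vars. The function name launch_body is hypothetical.

```python
import json


def launch_body(extra_vars):
    """Return the JSON request body for the launch endpoint."""
    # Inner dumps: the variables dictionary becomes a JSON string.
    # Outer dumps: that string becomes the value of "extra_vars".
    return json.dumps({"extra_vars": json.dumps(extra_vars)})


body = launch_body({"foo": "bar"})
print(body)  # prints {"extra_vars": "{\"foo\": \"bar\"}"}
```

The printed text is the same payload passed to curl with -d in the example above.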

22.13. Dynamic Inventory and private IP addresses

By default, Tower only shows instances in a VPC that have an Elastic IP (EIP) address associated with them. To view all of your VPC instances, perform the following steps:

- In the Tower interface, select your inventory.

- Click on the group that has the Source set to AWS, and click on the Source tab.

- In the “Source Variables” box, enter:

vpc_destination_variable: private_ip_address

Save and trigger an update of the group. You should now be able to see all of your VPC instances.

Note

Tower must be running inside the VPC with access to those instances in order to usefully configure them.

22.14. Filtering instances returned by the dynamic inventory sources in Tower

By default, the dynamic inventory sources in Tower (AWS, Rackspace, etc.) return all instances available to the cloud credentials being used. They are automatically joined into groups based on various attributes. For example, AWS instances are grouped by region, by tag name and value, by security groups, etc. To target specific instances in your environment, write your playbooks so that they target the generated group names. For example:

    ---
    - hosts: tag_Name_webserver
      tasks:
      ...
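
To predict which group name a given tag will produce, a small Python helper can approximate the naming pattern seen in tag_Name_webserver. This is an approximation built on an assumption: that the inventory source joins the tag key and value with underscores and replaces every character other than letters, digits, and underscores with an underscore. The function name tag_group_name is hypothetical, so always confirm the result against the group names Tower actually generates.

```python
import re


def tag_group_name(key, value):
    """Approximate the generated group name for an AWS tag key/value pair."""
    # Assumed rule: anything outside letters, digits, and underscores becomes "_".
    return re.sub(r"[^A-Za-z0-9_]", "_", "tag_{0}_{1}".format(key, value))
```

Under this assumption, the tag Name=webserver maps to tag_Name_webserver, the group used in the playbook above.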

You can also use the Limit field in the Job Template settings to limit a playbook run to a certain group, groups, hosts, or a combination thereof. The syntax is the same as the --limit parameter on the ansible-playbook command line.

You may also create your own groups by copying the auto-generated groups into your custom groups. Make sure that the Overwrite option is disabled on your dynamic inventory source, otherwise subsequent synchronization operations will delete and replace your custom groups.

22.15. Using an unreleased module from Ansible source with Tower

If there is a feature that is available in the latest Ansible core branch that you would like to leverage with your Tower system, making use of it in Tower is fairly simple.

First, determine which is the updated module you want to use from the available Ansible Core Modules or Ansible Extra Modules GitHub repositories.

Next, create a new directory, at the same directory level as your Ansible source playbooks, named /library.

Once this is created, copy the module you want to use and drop it into the /library directory. It will be used in preference to your system modules and can be removed once you have updated to the stable version via your normal package manager.

22.16. Using callback plugins with Tower

Ansible has a flexible method of handling actions during playbook runs, called callback plugins. You can use these plugins with Tower to do things like notify services upon playbook runs or failures, send emails after every playbook run, etc. For official documentation on the callback plugin architecture, refer to: http://docs.ansible.com/developing_plugins.html#callbacks

Note

Ansible Tower does not support the stdout callback plugin because Ansible only allows one, and it is already being used by Ansible Tower for streaming event data.

You may also want to review some example plugins, which should be modified for site-specific purposes, such as those available at: https://github.com/ansible/ansible/tree/devel/lib/ansible/plugins/callback

To use these plugins, put the callback plugin .py file into a directory called /callback_plugins alongside your playbook in your Tower Project.
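
A callback plugin placed in that directory is an ordinary Python file that defines a CallbackModule class. The following is a minimal sketch, not one of the plugins shipped with Ansible: the plugin name failure_notice, the helper format_failure, and the message layout are hypothetical. It assumes the version 2 callback interface (v2_runner_on_failed) and uses the notification type, because the stdout type is already taken by Tower.

```python
try:
    from ansible.plugins.callback import CallbackBase
except ImportError:
    # Fallback so the file can be imported for a quick check without Ansible installed.
    CallbackBase = object


def format_failure(host, task, message):
    """Build the one-line notice reported for a failed task."""
    return "FAILED {0} | {1} | {2}".format(host, task, message)


class CallbackModule(CallbackBase):
    """Hypothetical plugin that reports each failed task on a single line."""

    CALLBACK_VERSION = 2.0
    CALLBACK_TYPE = "notification"    # not "stdout": Tower already uses that one
    CALLBACK_NAME = "failure_notice"  # hypothetical plugin name
    CALLBACK_NEEDS_WHITELIST = False

    def v2_runner_on_failed(self, result, ignore_errors=False):
        # Called by Ansible each time a task fails on a host.
        host = result._host.get_name()
        task = result._task.get_name()
        message = result._result.get("msg", "no message")
        self._display.warning(format_failure(host, task, message))
```

Replacing the warning call with an HTTP request or an email is the usual way to adapt a plugin like this for site-specific notification.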

To make callbacks apply to every playbook, independent of any projects, put the plugin's .py file in one of the following directories (depending on your particular Linux distribution and method of Ansible installation):

* /usr/lib/pymodules/python2.7/ansible/callback_plugins

* /usr/local/lib/python2.7/dist-packages/ansible/callback_plugins

* /usr/lib/python2.6/site-packages/ansible/callback_plugins

Note

To have most callbacks shipped with Ansible applied globally, you must add them to the callback_whitelist setting of your ansible.cfg.

22.17. Connecting to Windows with winrm

By default, Tower attempts to connect to hosts over SSH. You must add the winrm connection info to the variables of the group to which the Windows hosts belong. To get started, edit the Windows group in which the hosts reside and place the variables in the source/edit screen for the group.

To add winrm connection info:

Edit the properties for the selected group by clicking the edit button to the right of the name of the group that contains the Windows servers. In the “variables” section, add your connection information as such:

ansible_connection: winrm

Once done, save your edits. If Ansible was previously attempting an SSH connection and failed, you should re-run the job template.

22.18. Importing existing inventory files and host/group vars into Tower

To import an existing static inventory and the accompanying host and group vars into Tower, your inventory should be in a structure that looks similar to the following:

    inventory/
    |-- group_vars
    |   `-- mygroup
    |-- host_vars
    |   `-- myhost
    `-- hosts

To import these hosts and vars, run the awx-manage command:

    awx-manage inventory_import --source=inventory/ \
        --inventory-name="My Tower Inventory"

If you only have a single flat file of inventory, a file called ansible-hosts, for example, import it like the following:

    awx-manage inventory_import --source=./ansible-hosts \
        --inventory-name="My Tower Inventory"

In case of conflicts or to overwrite an inventory named “My Tower Inventory”, run:

    awx-manage inventory_import --source=inventory/ \
        --inventory-name="My Tower Inventory" \
        --overwrite --overwrite-vars

If you receive an error, such as:

ValueError: need more than 1 value to unpack

Create a directory to hold the hosts file, as well as the group_vars:

mkdir -p inventory-directory/group_vars

Then, for each of the groups that have :vars listed, create a file called inventory-directory/group_vars/<groupname> and format the variables in YAML format.

Once broken out, the importer will handle the conversion correctly.
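
Breaking out the :vars sections by hand gets tedious for larger inventories, so the conversion can be scripted. The Python sketch below is one possible approach under stated assumptions: it only understands simple key=value lines beneath [groupname:vars] headers, it writes every value as a quoted string (adjust numbers and booleans by hand if your playbooks depend on their types), and all function names are hypothetical. The default output path follows the inventory-directory/group_vars layout created above.

```python
import json
from pathlib import Path


def group_vars_from_ini(text):
    """Collect the key=value pairs found under each [group:vars] header."""
    groups = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue  # skip blank lines and comments
        if line.startswith("[") and line.endswith("]"):
            name = line[1:-1]
            # Only [group:vars] sections are of interest here.
            current = name[: -len(":vars")] if name.endswith(":vars") else None
            if current is not None:
                groups.setdefault(current, {})
            continue
        if current is not None and "=" in line:
            key, value = line.split("=", 1)
            groups[current][key.strip()] = value.strip()
    return groups


def to_yaml(variables):
    """Render a flat dict as YAML; JSON-quoted strings are valid YAML scalars."""
    return "".join("{0}: {1}\n".format(key, json.dumps(value)) for key, value in variables.items())


def write_group_vars(groups, directory="inventory-directory/group_vars"):
    """Write one file per group, named after the group."""
    target = Path(directory)
    target.mkdir(parents=True, exist_ok=True)
    for group, variables in groups.items():
        (target / group).write_text(to_yaml(variables))
```

A typical run would read the flat inventory file, pass its text to group_vars_from_ini, and hand the result to write_group_vars before running the import again.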